Nvidia GeForce RTX 3050 review: A truly modern GPU for the masses (hopefully)

At a glance

Pros

- Great 1080p gaming with high visual settings

- 8GB of GDDR6 RAM

- Cool and quiet

- Playable ray tracing performance, especially with DLSS enabled

- Support for modern features like DLSS, Nvidia Reflex, AV1 decode, Nvidia Broadcast, PCIe Resizable BAR, and more

- No weird technical sacrifices like in Radeon RX 6500 XT

Cons

- $100 more than last generation’s xx50-class card

- No frills on EVGA XC Black model tested

- Mining-friendly configuration doesn’t bode well for price or availability on the streets; $250 price feels very optimistic

Our Verdict

Nvidia’s GeForce RTX 3050 delivers great 1080p gaming performance with modern features, including capable ray tracing chops and DLSS. It has plenty of memory and doesn’t make any unusual technical compromises, unlike AMD’s rival Radeon RX 6500 XT, but that potentially makes it a target for GPU miners—which could mean bad things for price and availability.

A year and a half into the latest generation of graphics cards—one plagued by chip shortages, logistics woes, tariffs, crypto demand, and scalpers—we’re finally starting to see the first GPUs for PC gamers on a tighter budget. And as the GeForce RTX 3050 we’re reviewing today shows, Nvidia and AMD couldn’t be going about it any more differently.

AMD landed the first strike. The Radeon RX 6500 XT arrived just last week, and AMD made some hard compromises to hit its low $199 price point. AMD’s cheap card strips out various features we’ve typically come to expect, and packs just 4GB of onboard memory with a scant 64-bit bus. That’ll help it evade the attention of crypto miners and hopefully free up supply on the streets, but AMD’s memory approach restricts the 6500 XT to 1080p gaming on Medium or High settings. Push beyond that, and performance stumbles hard.

Nvidia, on the other hand, takes the opposite route with the $250 GeForce RTX 3050. It’s essentially a traditional graphics card, using a cut-down RTX 3060 GPU with 8GB of VRAM and a standard 128-bit memory bus. This bad boy is built to run games with eye candy cranked up and those glorious real-time rays a-tracin’.

The memory configuration will likely make the RTX 3050 more attractive to miners (though it wields Nvidia’s Lite Hash Rate tech) when it launches on January 26, so we’ll have to see whether it or the Radeon RX 6500 XT winds up being more widely available on the streets. But there’s no question that the RTX 3050 absolutely spanks AMD’s GPU in the benchmark sheets. Let’s dig in.

GeForce RTX 3050 specs, features and price

The GeForce RTX 3050 uses a pared-back version of the GA106 GPU found in the RTX 3060. As such, it packs much beefier specs than the GTX 1650 Nvidia uses in the spec comparison chart below. (It’s also worth noting that the GTX 1650 launched at $150, a full $100 less than the RTX 3050.)

Nvidia

At reference spec, the RTX 3050 tops out at a 1,777MHz boost clock, matching the RTX 3060’s rate. But it has fewer CUDA cores (2,560 versus 3,584) along with fewer ROPs and texture units. That obviously affects performance. The RTX 3050 also wields a full array of Nvidia’s dedicated second-gen RT cores to enable real-time ray tracing, and 80 third-gen tensor cores to help with DLSS, Nvidia Broadcast, and other AI-infused tasks. The Radeon RX 6500 XT ostensibly includes ray tracing hardware of its own, but that GPU is so stripped-down, you can’t practically enable the cutting-edge lighting feature in games. The RTX 3050, on the other hand, handles ray tracing like a champ, especially in games that also support Nvidia’s Deep Learning Super Sampling (DLSS) tech.

Nvidia also gave the RTX 3050 a standard memory configuration that will have no problem playing games at 1080p today or in the future. It comes with 8GB of GDDR6 running at 14Gbps, all over a 128-bit bus. That’s good for 224GB per second of memory bandwidth, slower than the RTX 3060’s 360GB/s over its wider bus, but still A-OK for the 1080p gaming this card is intended for. The extra memory capacity also helps with ray tracing, since flipping on that feature places much more stress on a graphics card’s VRAM.
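
For the curious, those bandwidth figures fall out of simple arithmetic: per-pin data rate times bus width, divided by eight bits per byte. Here is a minimal Python sketch of that math. The function name is ours, and the RTX 3060's 15Gbps memory speed and 192-bit bus are assumptions taken from Nvidia's public spec sheet rather than from this review.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    Per-pin data rate (Gbps) times the number of bus pins (bits),
    divided by 8 to convert bits to bytes.
    """
    return data_rate_gbps * bus_width_bits / 8


# RTX 3050: 14Gbps over a 128-bit bus, as quoted in this review.
rtx_3050 = memory_bandwidth_gbs(14, 128)

# RTX 3060: 15Gbps over a 192-bit bus (assumed from Nvidia's public specs).
rtx_3060 = memory_bandwidth_gbs(15, 192)

print(f"RTX 3050: {rtx_3050:.0f} GB/s")  # 224 GB/s
print(f"RTX 3060: {rtx_3060:.0f} GB/s")  # 360 GB/s
```

Both results line up with the 224GB/s and 360GB/s numbers above. These are theoretical peaks, not measured throughput.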

Brad Chacos/IDG

AMD stripped out various decoding and encoding functions from its budget GPU, while cutting the display outputs on the Radeon RX 6500 XT down to just a single HDMI and a single DisplayPort connection. That’s not the case with the RTX 3050, which comes with all the modern encode/decode functionality of other RTX 30-series GPUs (including AV1 support) and a full complement of visual outputs. The EVGA RTX 3050 XC Black model we’re reviewing today comes with a single HDMI 2.1 port and a trio of DisplayPorts, along with an 8-pin power connector.

Since the RTX 3050 comes with all the same hardware and software as its Nvidia siblings, just in pared-down form, it also supports Nvidia’s wide ecosystem of killer software features. Nvidia Broadcast, latency-lowering Nvidia Reflex, ShadowPlay, NVENC encoding, DLSS, Nvidia Image Scaling, it all works. Hallelujah.

As with the RTX 3060, Nvidia isn’t rolling out one of its snazzy Founders Edition models for the RTX 3050. Instead, it’s relying on custom cards from partners to carry the load in today’s scorching-hot graphics card market. The EVGA GeForce RTX 3050 XC Black sent over for testing largely mirrors the design of the RTX 3060 XC Black we benchmarked before. This dual-slot graphics card sticks to reference specs and eschews cost-adding extras like a dual-BIOS switch or a backplate to help it land at Nvidia’s $249 price point for the RTX 3050. The lack of a backplate makes the EVGA XC Black a bit of an eyesore in an era when backplates have largely become the norm, but it’s otherwise inoffensive aesthetically, with a blacked-out design.

EVGA mistakenly loaded review samples with a slightly faster BIOS intended for a step-up overclock model. Our unit is rated for a 1,845MHz boost clock, as opposed to the 1,777MHz reference spec. That should only move the performance needle by a couple of percentage points, EVGA says, and production units being shipped to store shelves have the correct BIOS installed, with the correct speeds.
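
To put that BIOS mix-up in perspective, here is a quick Python sketch of the clock-speed gap between our sample and the reference spec. The helper name is ours, and treating the clock uplift as a rough ceiling on the frame rate uplift is an assumption; real games rarely scale one-to-one with boost clock.

```python
def percent_faster(actual_mhz: float, reference_mhz: float) -> float:
    """Relative clock uplift over the reference spec, in percent."""
    return (actual_mhz - reference_mhz) / reference_mhz * 100


# Review sample's rated boost clock versus Nvidia's reference boost clock.
bios_uplift = percent_faster(1845, 1777)

print(f"Review sample boost clock is {bios_uplift:.1f}% above reference")
```

That works out to a little under 4 percent on paper, which squares with EVGA's "couple of percentage points" estimate once you account for games not scaling perfectly with clock speed.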

If you want to put the dual-axial fans to more work in a bid to squeeze even more performance out of the EVGA RTX 3050 XC Black, the company’s sublime Precision X1 GPU management software contains everything you need to monitor and overclock the card. The slickly designed program is one of our favorites.

And now the stage is set. Let’s get to the benchmarks.

Our test system

We test graphics cards on an AMD Ryzen 5000-series test rig in order to benchmark the effect of PCIe 4.0 support on modern GPUs, as well as the performance-boosting AMD Smart Access Memory and Nvidia Resizable BAR features (which are both based on the same underlying PCIe standard). Currently, we’re testing it on an open bench with AMD’s Wraith Max air cooler. Most of the hardware was provided by the manufacturers, but we purchased the storage ourselves.

- AMD Ryzen 9 5900X, stock settings

- AMD Wraith Max cooler

- MSI Godlike X570 motherboard

- 32GB G.Skill Trident Z Neo DDR4 3800 memory

- EVGA 1200W SuperNova P2 power supply

- 1TB SK Hynix Gold S31 SSD

We’re comparing the $250 EVGA GeForce RTX 3050 XC Black against its EVGA RTX 3060 XC Black cousin, and yesteryear’s RTX 2060 Founders Edition. On the AMD side of things, we’ve included the RTX 3050’s direct competitor, the $199 XFX Radeon RX 6500 XT Qick 210, along with the step-up XFX Radeon RX 6600 Swft 210 and Asus ROG Strix Radeon RX 6600 XT, plus the Radeon RX 5600 XT and 5700 from last generation.

Again, EVGA mistakenly loaded review samples of the RTX 3050 XC Black with a slightly faster BIOS intended for a step-up overclock model. Our unit is rated for a 1,845MHz boost clock, as opposed to the 1,777MHz reference spec. Production units being shipped to retail shelves have the correct BIOS installed, with the correct speeds. The performance results shown below may be a frame or three faster than what you’d get from this card in real life as a result, but there should be nothing tangibly different in the gaming experience.

We test a variety of games spanning various engines, genres, vendor sponsorships (Nvidia, AMD, and Intel), and graphics APIs (DirectX 11, DX12, and Vulkan). Each game is tested using its in-game benchmark at the highest possible graphics presets unless otherwise noted, with VSync, frame rate caps, real-time ray tracing or image upsampling effects, and FreeSync/G-Sync disabled, along with any other vendor-specific technologies like FidelityFX tools or Nvidia Reflex. PCIe Resizable BAR and AMD’s Smart Access Memory were also disabled for testing. We’ve enabled temporal anti-aliasing (TAA) to push these cards to their limits. We run each benchmark at least three times and list the average result for each test.
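
The "run it at least three times and average" step can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not our actual tooling: the function name is hypothetical and the frame rates below are made-up sample numbers, not results from this review.

```python
from statistics import mean


def benchmark_score(run_fps: list[float], min_runs: int = 3) -> float:
    """Average the frame rates from repeated runs of one in-game benchmark.

    Refuses to report a score from fewer than `min_runs` passes, mirroring
    the at-least-three-runs rule described above.
    """
    if len(run_fps) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(run_fps)}")
    return mean(run_fps)


# Hypothetical frame rates from three passes of the same benchmark.
sample_runs = [61.8, 62.4, 62.1]

print(f"Reported result: {benchmark_score(sample_runs):.1f} fps")
```

Averaging several passes smooths out run-to-run noise from things like shader compilation and background tasks, which matters when cards land within a frame or two of each other.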

You’ll see the Radeon RX 6500 XT lagging hard in several of these tests, but the reality is even uglier than it seems. AMD’s budget GPU also suffered from severe lag spikes in several games thanks to its barely-there 4GB of RAM and scant 64-bit bus. It runs fine at 1080p Medium or High settings, but the maxed-out graphics options used in this testing are simply too much for it, giving the RTX 3050 the clear edge.

Gaming performance benchmarks

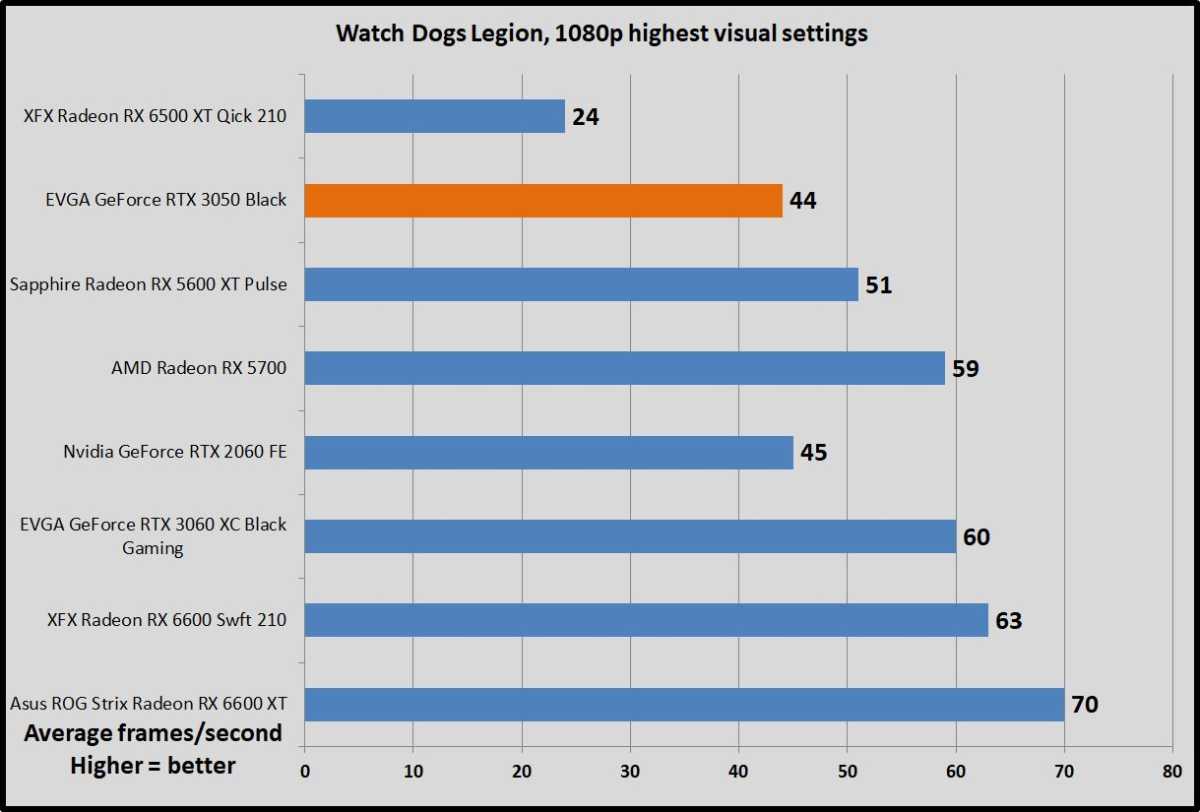

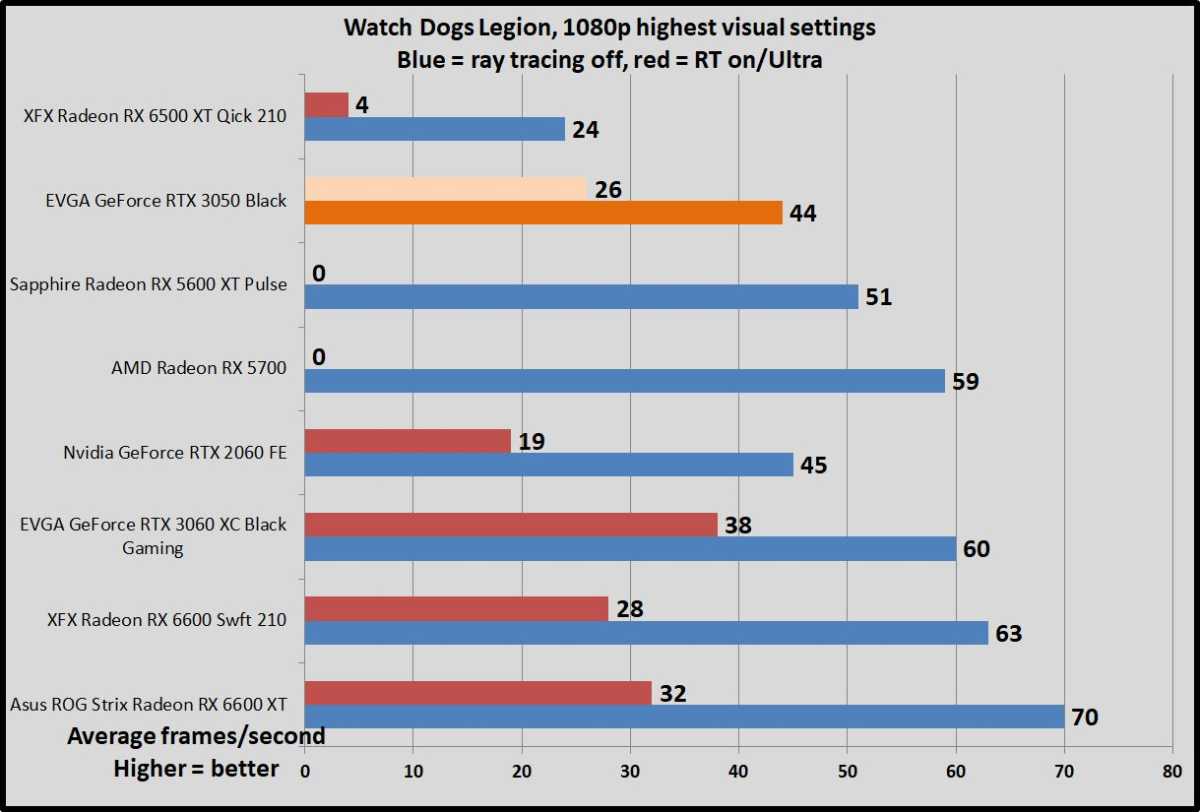

Watch Dogs: Legion

Watch Dogs: Legion is one of the first games to debut on next-gen consoles. Ubisoft upgraded its Disrupt engine to include cutting-edge features like real-time ray tracing and Nvidia’s DLSS. We disable those effects for this testing, but Legion remains a strenuous game even on high-end hardware with its optional high-resolution texture pack installed.

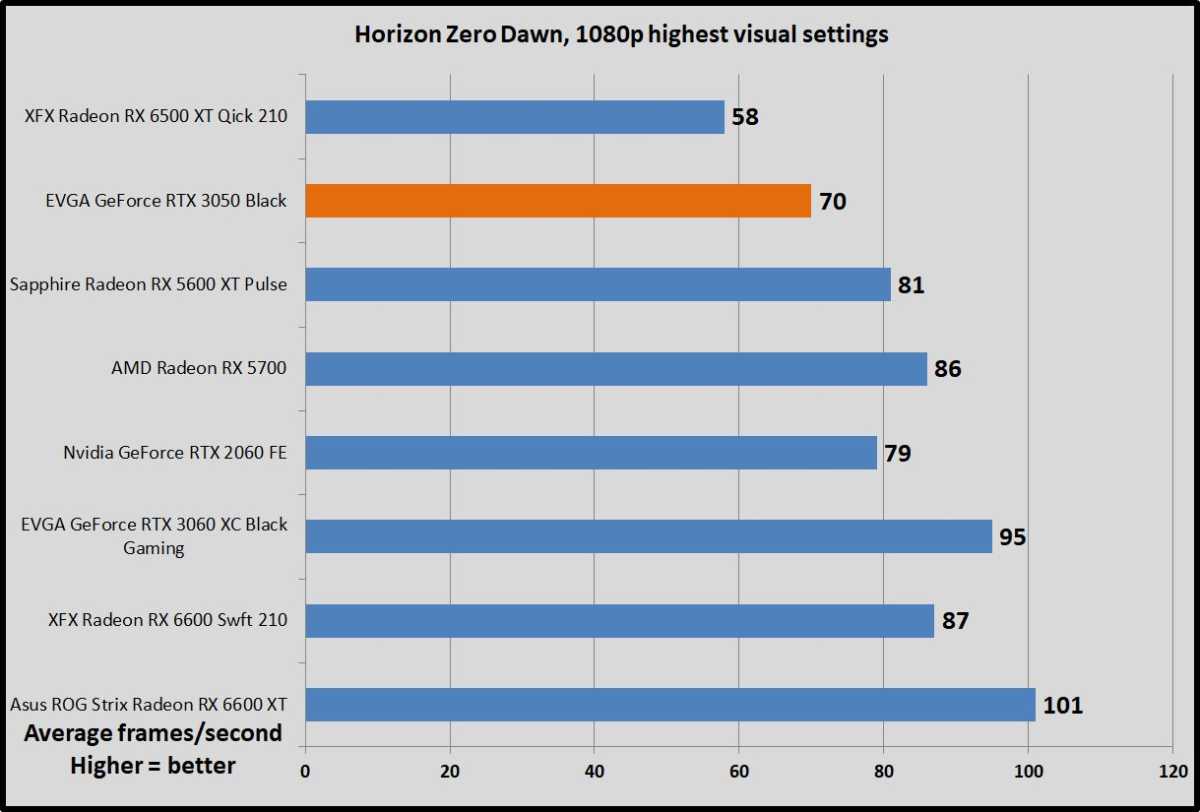

Horizon Zero Dawn

Yep, PlayStation exclusives are coming to the PC now. Horizon Zero Dawn runs on Guerrilla Games’ Decima engine, the same engine that powers Death Stranding.

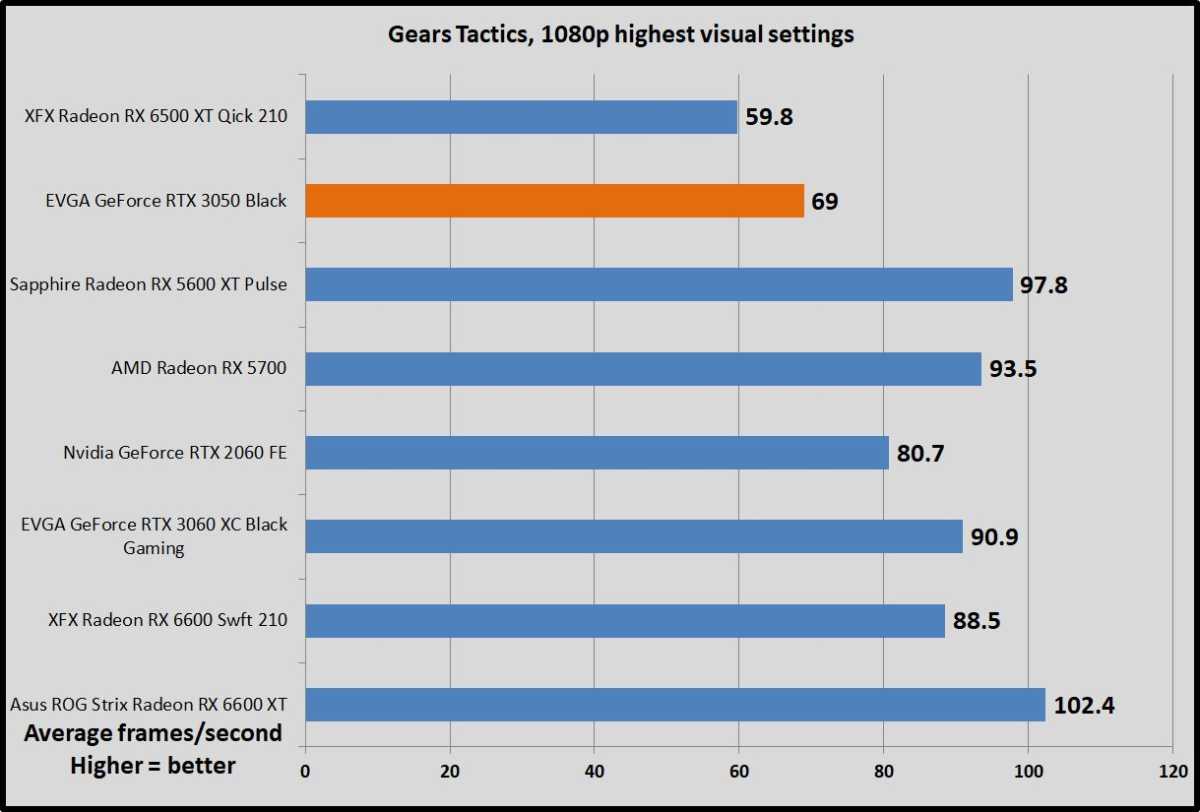

Gears Tactics

Gears Tactics puts its own brutal, fast-paced spin on the XCOM-like genre. This Unreal Engine 4-powered game was built from the ground up for DirectX 12, and we love being able to work a tactics-style game into our benchmarking suite. Better yet, the game comes with a plethora of graphics options for PC snobs. More games should devote such loving care to explaining what flipping all these visual knobs means.

You can’t use the presets to benchmark Gears Tactics, as it intelligently scales to work best on your installed hardware, meaning that “Ultra” on one graphics card can load different settings than “Ultra” on a weaker card. We manually set all options to their highest possible settings.

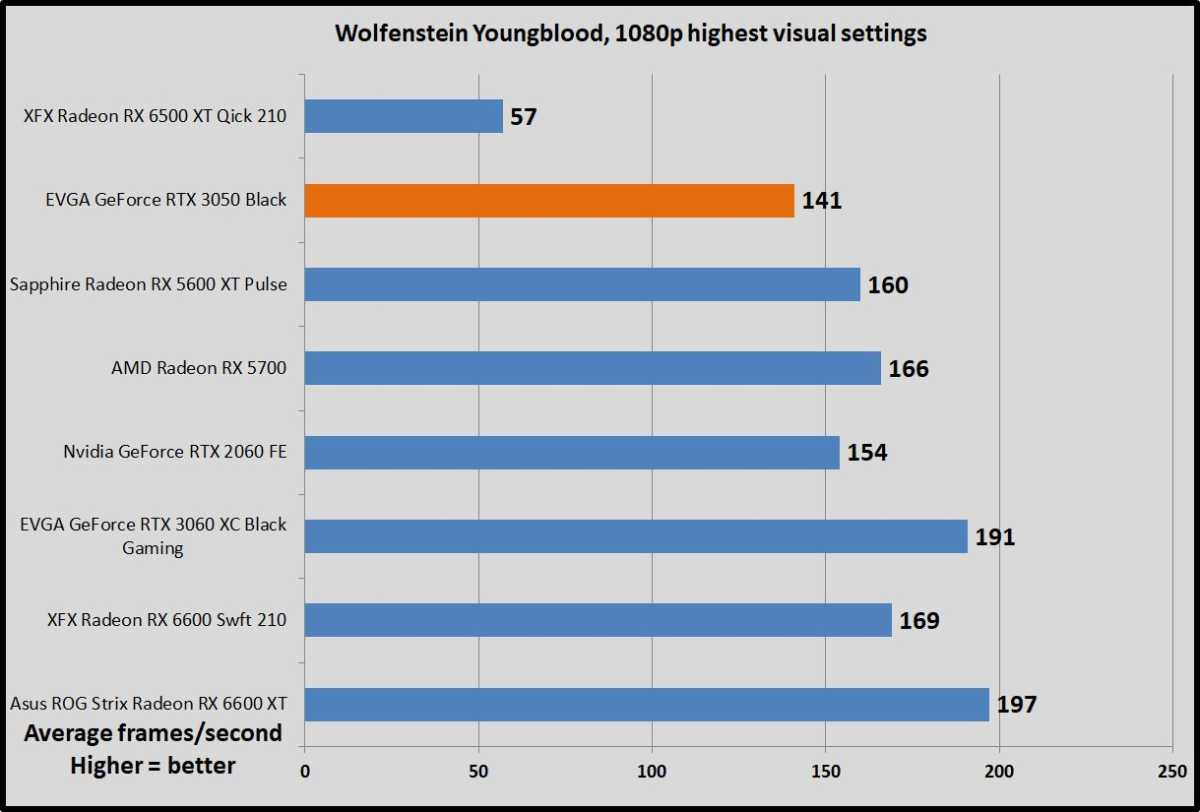

Wolfenstein: Youngblood

Wolfenstein: Youngblood is more fun when you can play cooperatively with a buddy, but it’s a fearless experiment—and an absolute technical showcase. Running on the Vulkan API, Youngblood achieves blistering frame rates, and it supports all sorts of cutting-edge technologies like ray tracing, DLSS 2.0, HDR, GPU culling, asynchronous computing, and Nvidia’s Content Adaptive Shading. The game includes a built-in benchmark with two different scenes; we tested Riverside.

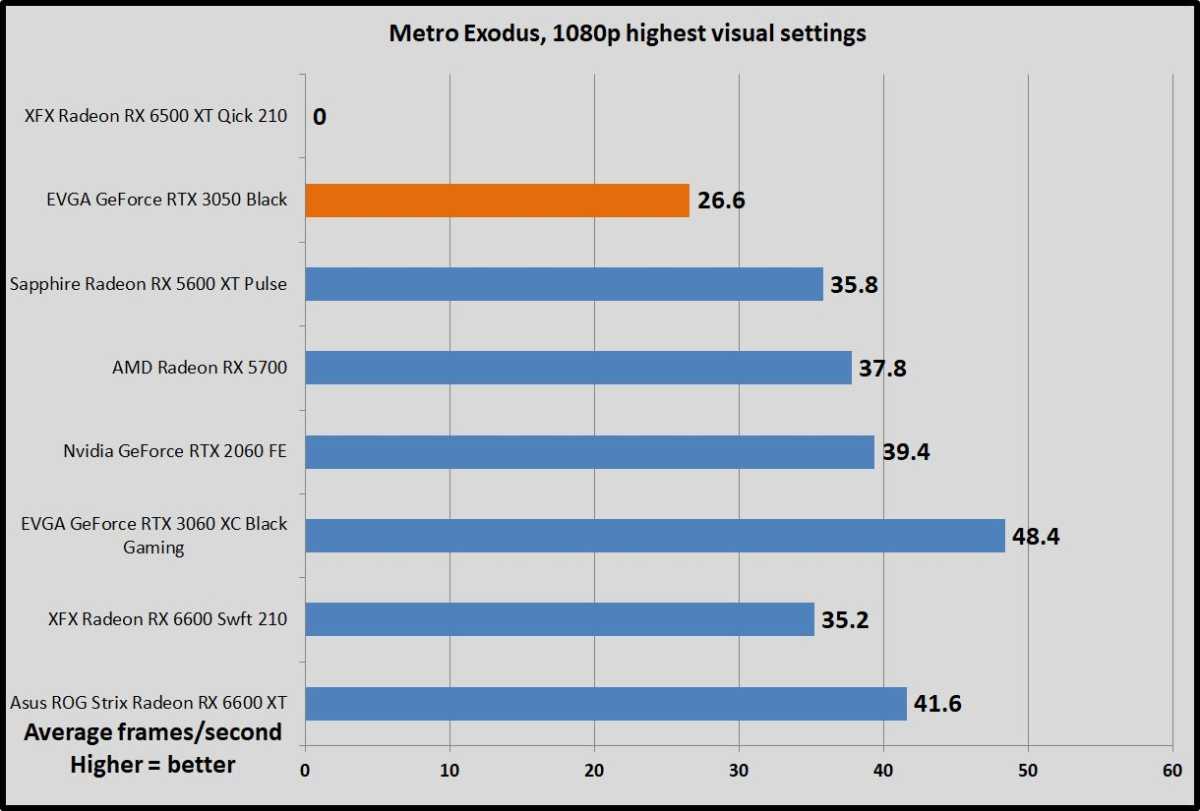

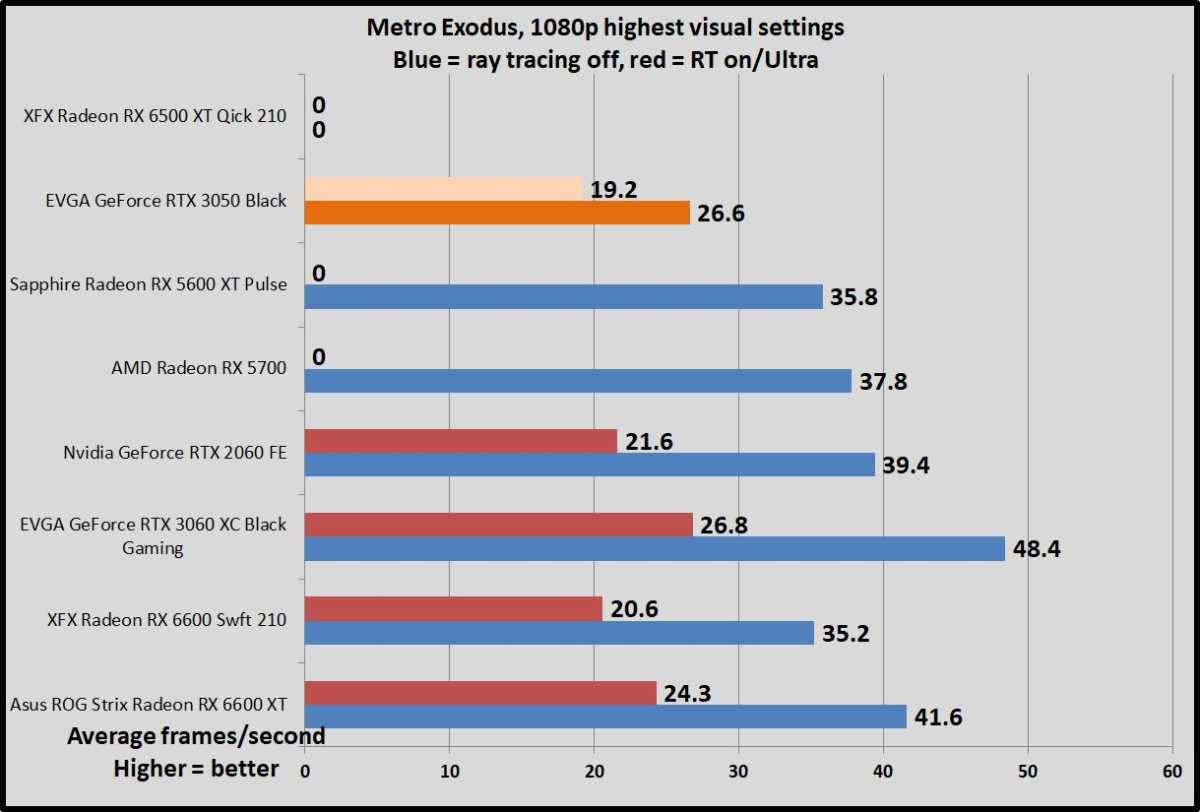

Metro Exodus

One of the best games of 2019, Metro Exodus remains one of the best-looking games around, too. The latest version of the 4A Engine provides incredibly luscious, ultra-detailed visuals, with one of the most stunning real-time ray tracing implementations released yet. The Extreme graphics preset we benchmark can melt even the most powerful modern hardware, as you’ll see below, though the game’s Ultra and High presets still look good at much higher frame rates. Bumping the settings down to “just” Ultra essentially doubles frame rates.

We test in DirectX 12 mode with ray tracing, Hairworks, and DLSS disabled. The Radeon RX 6500 XT refused to run at our test settings whatsoever, hence the zero score.

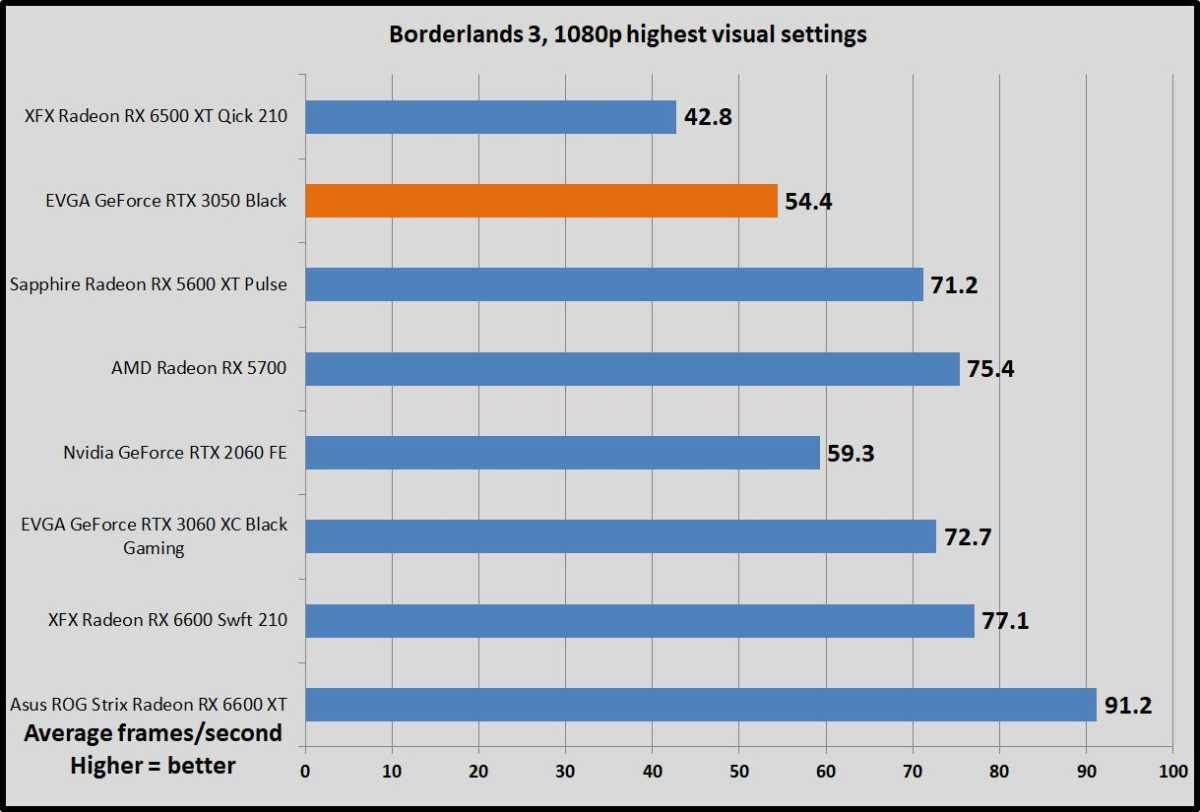

Borderlands 3

Borderlands is back! Gearbox’s game defaults to DX12, so we do as well. It gives us a glimpse at the ultra-popular Unreal Engine 4’s performance in a traditional shooter. This game tends to favor AMD hardware.

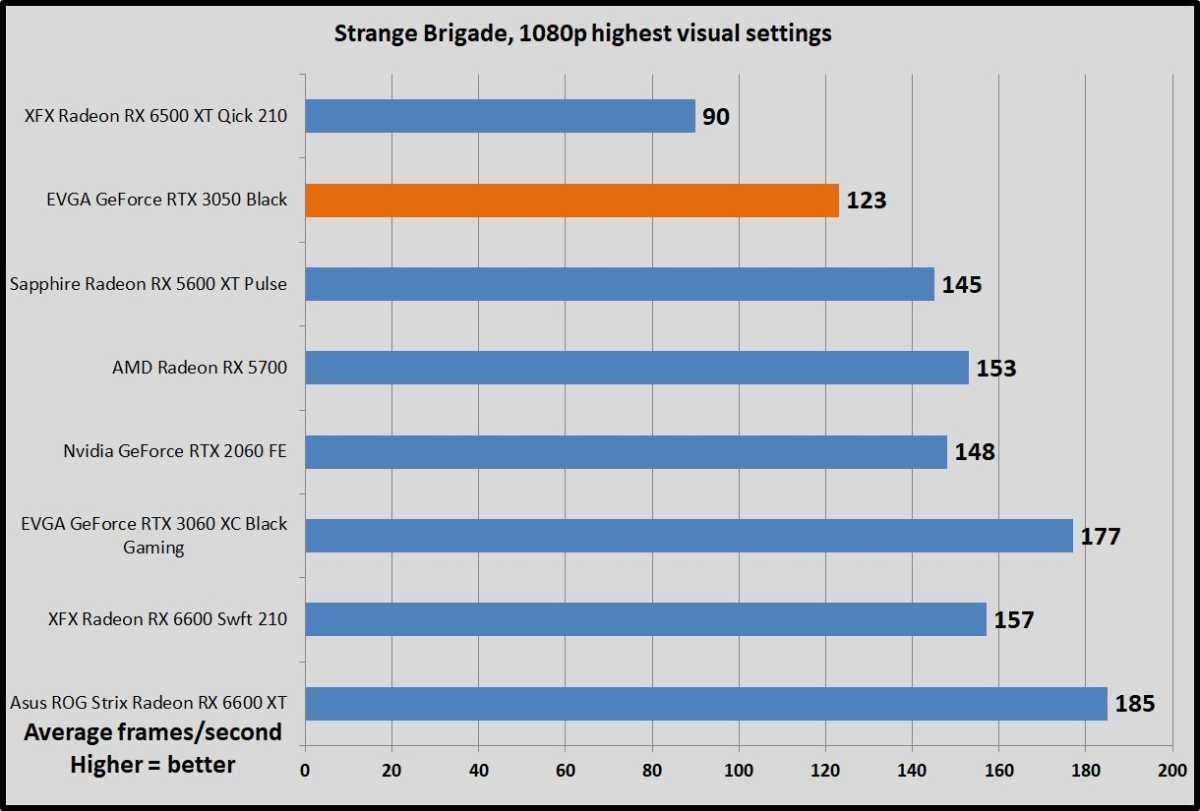

Strange Brigade

Strange Brigade is a cooperative third-person shooter where a team of adventurers blasts through hordes of mythological enemies. It’s a technological showcase, built around the next-gen Vulkan and DirectX 12 technologies and infused with features like HDR support and the ability to toggle asynchronous compute on and off. It uses Rebellion’s custom Azure engine. We test using the Vulkan renderer, which is faster than DX12.

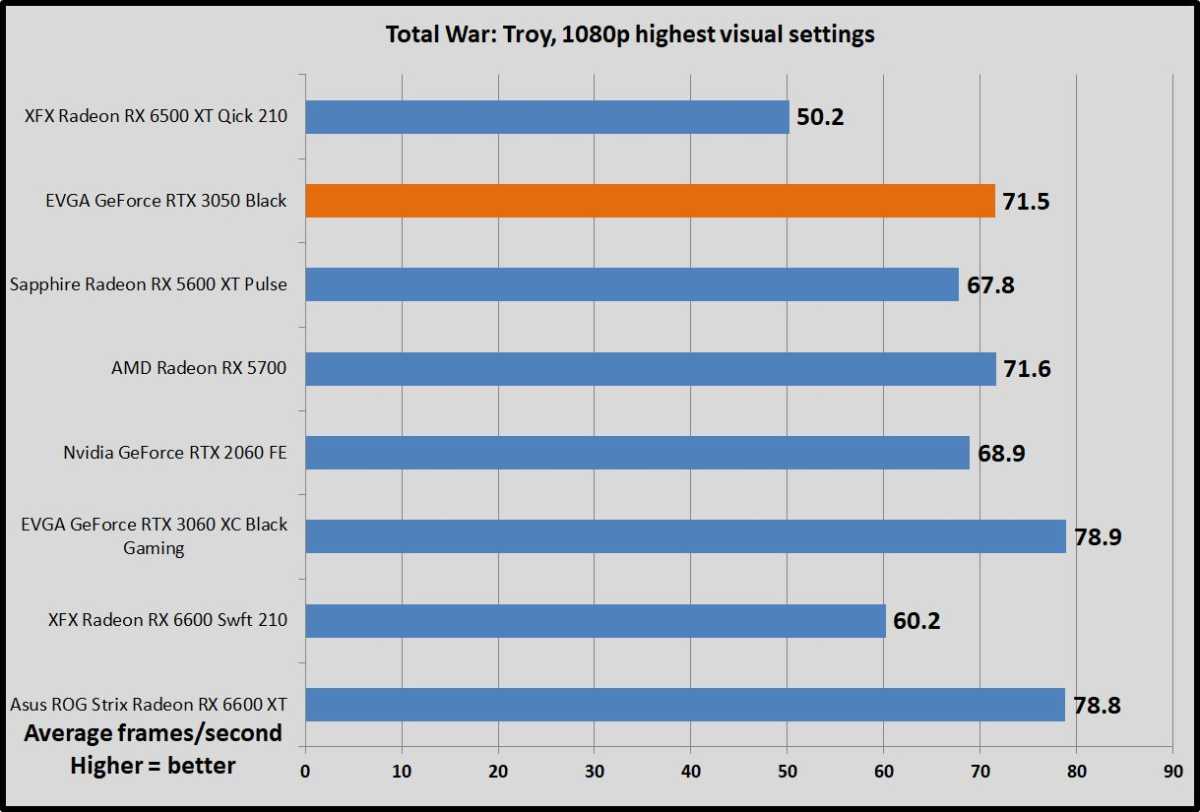

Total War: Troy

The latest game in the popular Total War saga, Troy was given away free for its first 24 hours on the Epic Games Store, moving over 7.5 million copies before it went on proper sale. Total War: Troy is built using a modified version of the Total War: Warhammer 2 engine, and this DX11 title looks stunning for a turn-based strategy game. We test the more intensive battle benchmark.

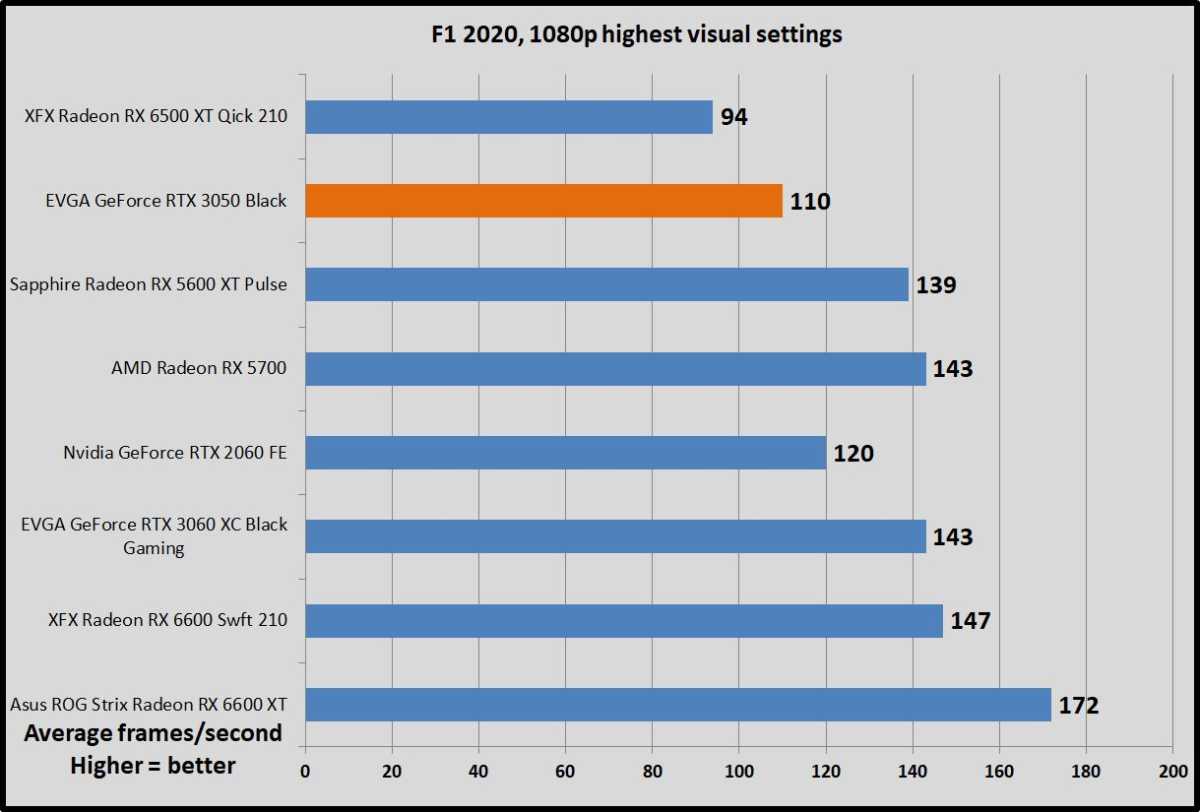

F1 2020

F1 2020 is a gem to test, supplying a wide array of both graphical and benchmarking options, making it a much more reliable (and fun) option than the Forza series. It’s built on the latest version of Codemasters’ buttery-smooth Ego game engine, complete with support for DX12 and Nvidia’s DLSS technology. We test two laps on the Australia course, with clear skies on and DLSS off.

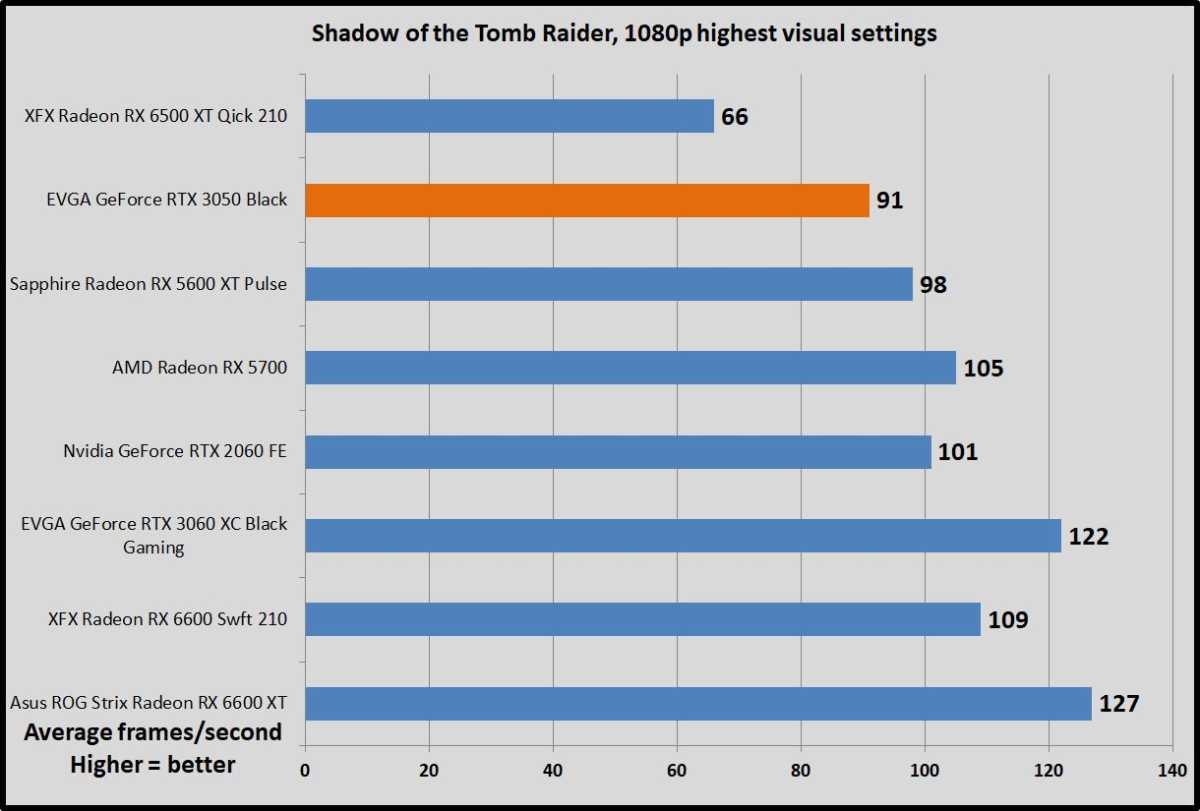

Shadow of the Tomb Raider

Shadow of the Tomb Raider concludes the reboot trilogy, and it’s still utterly gorgeous. Square Enix optimized this game for DX12 and recommends DX11 only if you’re using older hardware or Windows 7, so we test with DX12. Shadow of the Tomb Raider uses an enhanced version of the Foundation engine that also powered Rise of the Tomb Raider and includes optional real-time ray tracing and DLSS features.

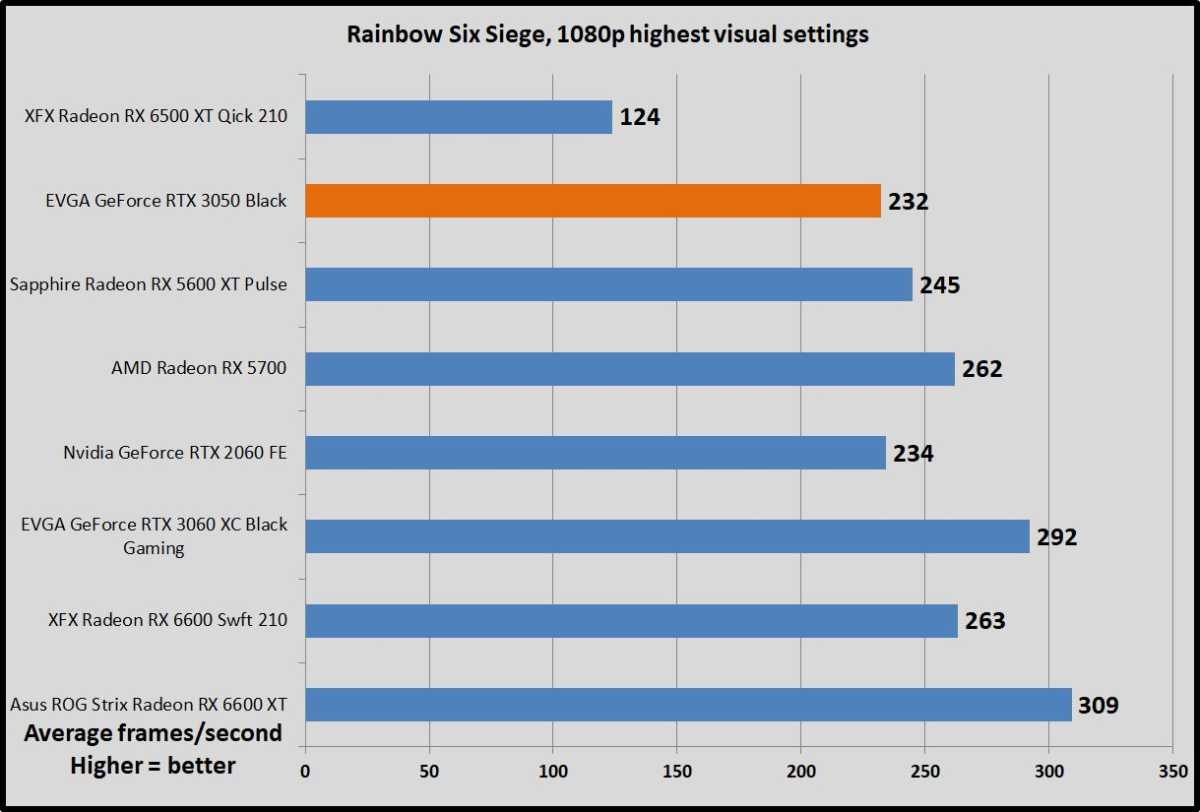

Rainbow Six Siege

Rainbow Six Siege still dominates the Steam charts years after its launch, and Ubisoft supports it with frequent updates and events. The developers have poured a ton of work into the game’s AnvilNext engine over the years, eventually rolling out a Vulkan version of the game that we use to test. By default, the game lowers the render scaling to increase frame rates, but we set it to 100 percent to benchmark native rendering performance on graphics cards. Even still, frame rates soar.

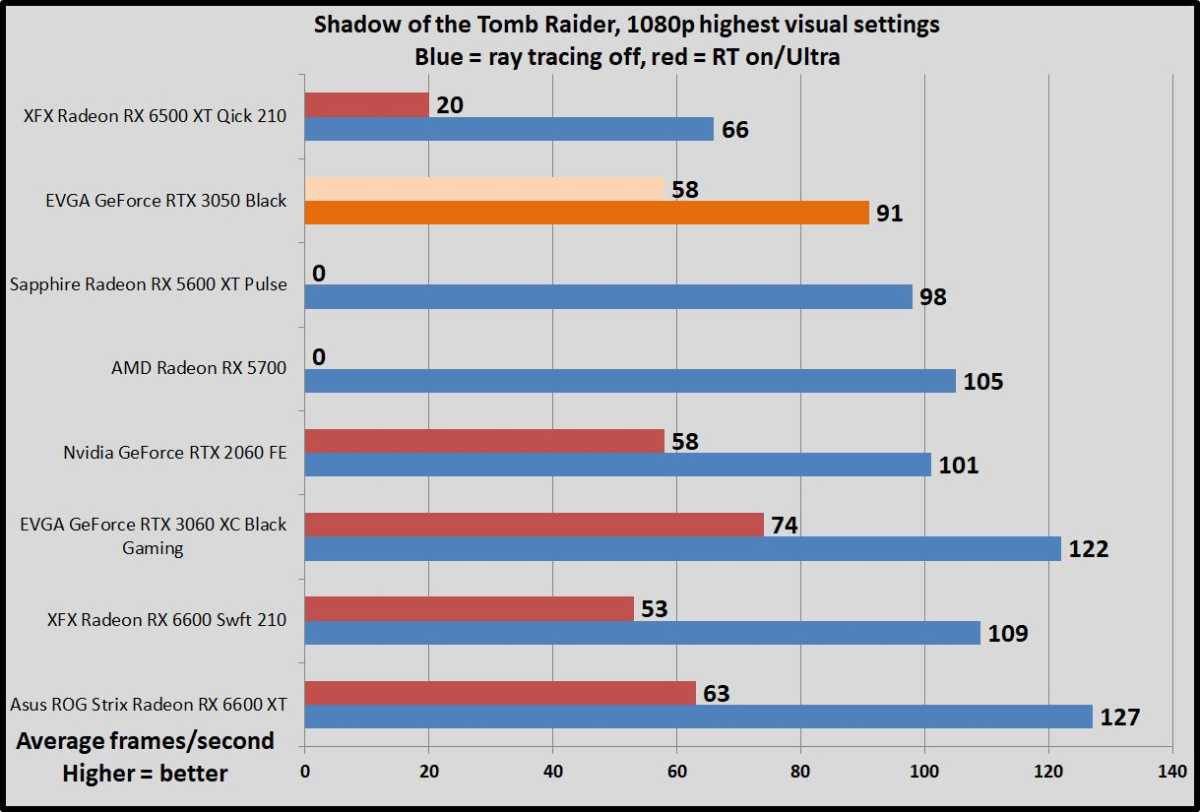

Ray tracing performance

We also benchmarked the GeForce RTX 3050 in a handful of titles that support cutting-edge real-time ray tracing effects. While the newer Radeon RX 6000-series GPUs now support ray tracing, older AMD models don’t.

Nvidia holds a key advantage in ray-traced games thanks to its Deep Learning Super Sampling technology (DLSS), which leverages AI tensor cores embedded in RTX GPUs to internally render games at a lower resolution, then up-res them to your desired resolution using machine learning to fill in the gaps. DLSS 2.0 works like black magic and gives Nvidia a strong lead in ray-tracing performance. Without it, you usually can’t run ray-traced games above 1080p resolution.
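
To get a feel for why rendering internally at a lower resolution helps so much, here is a rough Python sketch of the pixel math. The two-thirds per-axis scale factor is an assumption chosen for illustration (it corresponds to rendering 1280x720 for a 1080p output); actual DLSS modes and their scale factors vary by game and setting, and the function name is ours.

```python
def internal_resolution(out_w: int, out_h: int, axis_scale: float) -> tuple[int, int]:
    """Internal render resolution for an upscaler, given a per-axis scale factor."""
    return round(out_w * axis_scale), round(out_h * axis_scale)


out_w, out_h = 1920, 1080

# Assumed scale factor for illustration only: two-thirds on each axis.
w, h = internal_resolution(out_w, out_h, 2 / 3)

# Share of the output pixel count the GPU actually has to shade per frame.
pixel_share = (w * h) / (out_w * out_h)

print(f"Internal render: {w}x{h}")
print(f"Pixels shaded per frame: {pixel_share:.0%} of native")
```

Under that assumption the GPU shades well under half the pixels of a native 1080p frame, and the upscaler fills in the rest. That is where the extra headroom for ray tracing comes from.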

AMD offers its own FidelityFX Super Resolution to achieve the same basic function, but it uses different underlying technology with no reliance on AI or dedicated hardware. Both DLSS and FSR only work in games that support them, however, and the games we test here all support DLSS alone, so flipping it on wouldn’t be fair to AMD. Our benchmarks below therefore show performance with ray tracing enabled but no DLSS or FSR. Flip those features on in compatible games and you’ll see frame rates soar. Testing without upsampling active also gives us a glimpse into the raw ray tracing performance chops for these GPUs.

We plan to expand our ray-traced game suite soon, but for now we’re giving Watch Dogs: Legion, Metro: Exodus, and Shadow of the Tomb Raider a whirl to put the capabilities to the test, with ray tracing options set to Ultra in each title. Legion packs ray-traced reflections, Tomb Raider includes ray-traced shadows, and Metro features more strenuous (and mood-enhancing) ray-traced global illumination. Spoiler: It doesn’t go well for the $199 Radeon RX 6500 XT and its unusual technical configuration.

Let’s kick things off with Shadow of the Tomb Raider’s ray-traced shadows.

Metro: Exodus uses ray tracing for global illumination. The Radeon RX 6500 XT refused to run at our test settings, hence the zero score. We test at maxed-out settings, but dropping the visual preset down from “Extreme” to “Ultra” and flipping on DLSS would greatly improve performance in this game from a practical standpoint.

Watch Dogs: Legion hammers your system regardless of whether you have ray tracing on, at least with the high-resolution texture pack installed. Activating the ray-traced reflections exacerbates the issue. Just ask the Radeon RX 6500 XT.

Bottom line? While the inclusion of ray accelerators in the Radeon RX 6500 XT gave AMD the chance to crow about being the first sub-$200 graphics card to support ray tracing, our tests show that you wouldn’t actually want to game on that card with ray tracing on. The GeForce RTX 3050 runs laps around its rival.

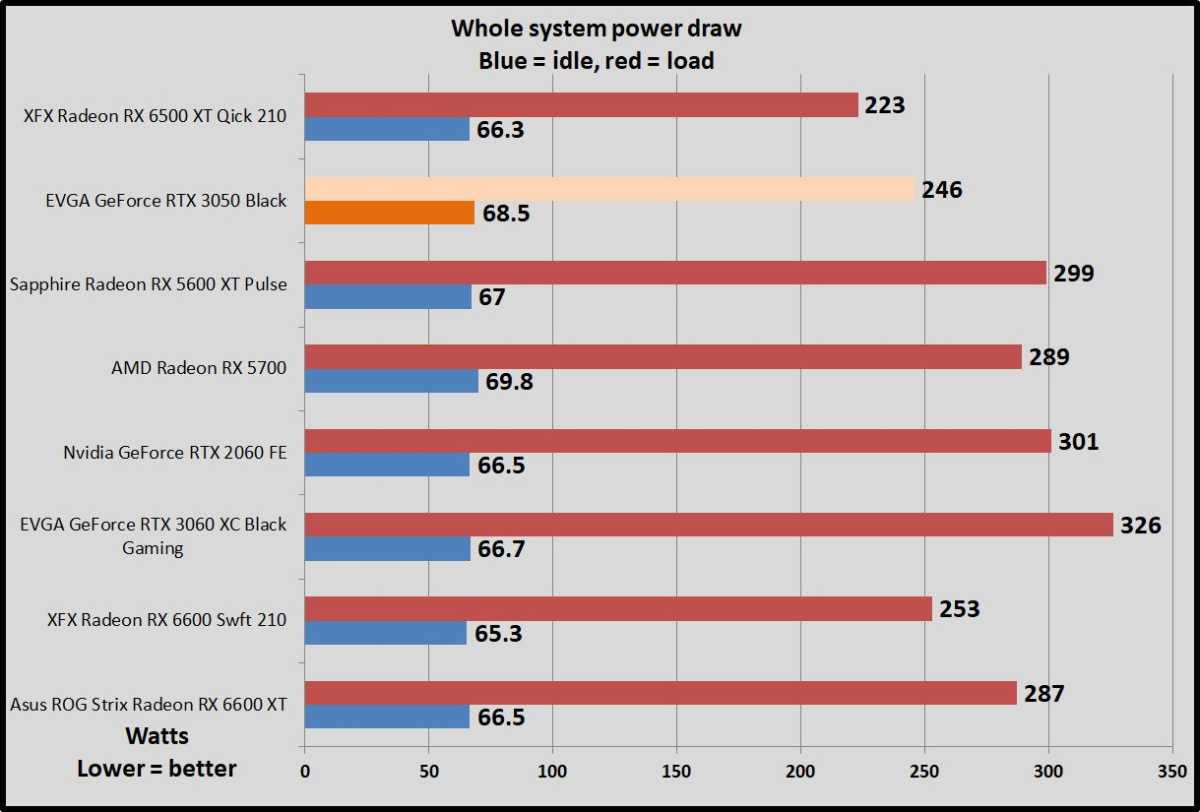

Power draw, thermals, and noise

We test power draw by looping the F1 2020 benchmark at 4K for about 20 minutes after we’ve benchmarked everything else and noting the highest reading on our Watts Up Pro meter, which measures the power consumption of our entire test system. The initial part of the race, where all competing cars are onscreen simultaneously, tends to be the most demanding portion.

This isn’t a worst-case test; this is a GPU-bound game running at a GPU-bound resolution to gauge performance when the graphics card is sweating hard. If you’re playing a game that also hammers the CPU, you could see higher overall system power draws. Consider yourself warned.

No complaints here. The GeForce RTX 3050 simply doesn’t need a lot of power to do work. Nvidia’s Ampere architecture isn’t quite as efficient as AMD’s rival RDNA 2 design, as a close look at these results indicates, but it doesn’t matter this far down the GPU stack.

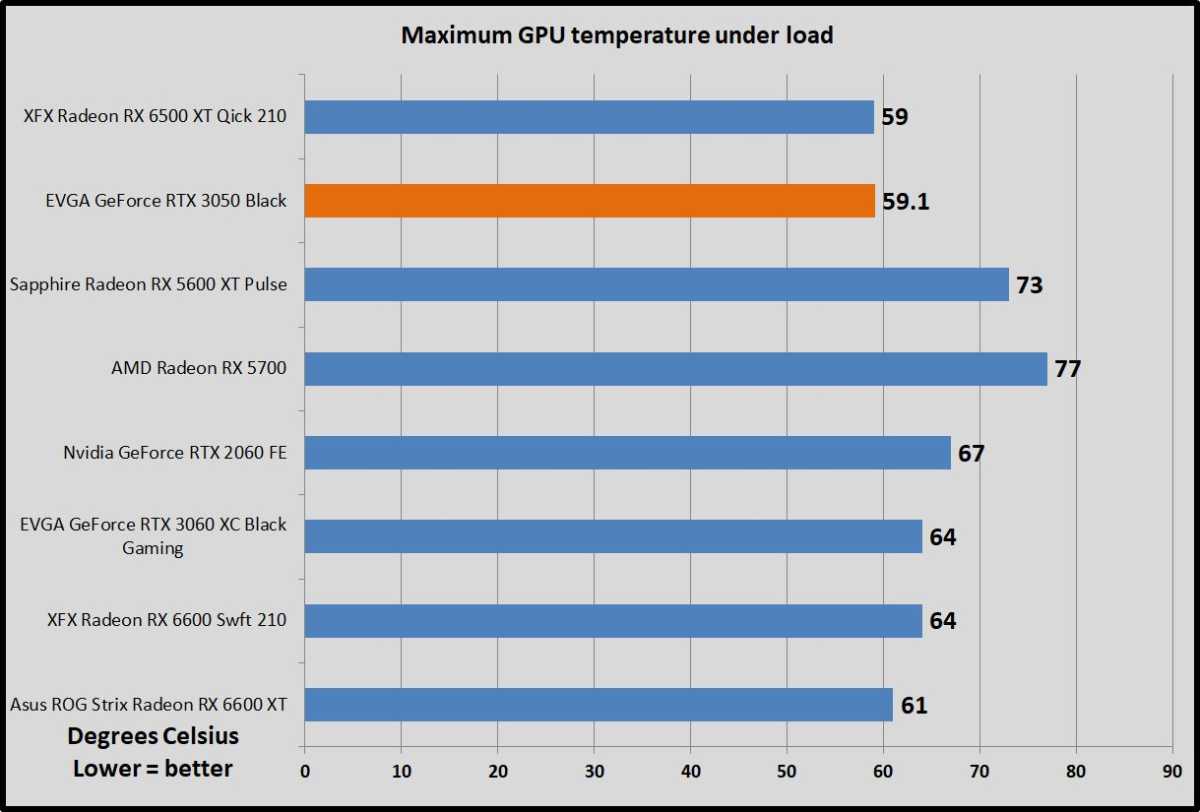

We test thermals by leaving GPU-Z open during the F1 2020 power draw test, noting the maximum temperature at the end.

Again, no complaints here. EVGA’s XC Black cooler may lack frills, but it sure gets the job done, and it stays nice and quiet (though not quite inaudible) doing it.

Should you buy the GeForce RTX 3050?

If you can find the GeForce RTX 3050 for close to its $250 MSRP, absolutely. In today’s market it’d still be a relative steal at $300 to $350 (sigh), even though the GTX 1650 launched at $150, and the 3050 is only a bit faster than the GTX 1660 Ti that launched at $280 last generation (and is currently selling for $400 to $475 used on Ebay, double sigh). The GTX cards lacked ray tracing or DLSS capabilities, however.

We’re not in 2019 anymore though. The GeForce RTX 3050 is a great 1080p graphics card in these trying times, capable of chewing through games at 60-plus frames per second at 1080p resolution, usually with all the visual bells and whistles enabled. (You may need to bump some settings down to High in especially strenuous games.) It absolutely smashes AMD’s $199 rival Radeon RX 6500 XT. That goes doubly so in ray-traced games, where the AMD card struggles to achieve frame rates in the single digits without the help of image upsampling. Gross. The GeForce RTX 3050’s ample 8GB memory buffer and support for killer Nvidia features like Reflex, Broadcast, and DLSS only sweeten the pot. The GeForce RTX 3050 delivers everything you’d want from a modern 1080p graphics card, from fast frame rates to fully loaded features.

But what PC gamers really want is to be able to buy graphics cards at sane prices. That hasn’t happened in well over a year. The jury remains out on whether Nvidia will be able to keep the RTX 3050 in better supply than its other GPUs over the weeks and months to come, but I’m less optimistic here than I am with AMD’s Radeon RX 6500 XT.

The GeForce RTX 3050 runs laps around AMD’s offering, but the severe compromises AMD made while building the Radeon RX 6500 XT mean it has a chance of evading the attention of crypto miners, while its ultra-tiny GPU die also lets AMD pump out a lot of chips. The GeForce RTX 3050, on the other hand, sticks to a standard memory configuration that can be used to mine Ethereum, and uses a cut-down version of the big GA106 die found in the RTX 3060. Yes, crypto prices have plummeted in recent days and Nvidia equipped the RTX 3050 with anti-mining Lite Hash Rate technology, but that’s been beaten before. And the RTX 3050’s GPU is over 2.5x larger than the Radeon’s die, which means AMD can squeeze many, many more chips out of a wafer.
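
How much does die size matter for supply? A common back-of-the-envelope approximation for gross dies per wafer gives a rough idea. The Python sketch below is illustrative only: the die areas (about 276mm² for GA106 and about 107mm² for the Radeon's Navi 24) are assumptions drawn from public reporting rather than from this review, the 300mm wafer size is assumed, and the formula ignores yield, scribe lines, and the fact that the two chips are built on different manufacturing processes.

```python
import math


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Rough count of whole dies that fit on a round wafer.

    Uses the common approximation: wafer area divided by die area,
    minus an edge-loss term for partial dies around the circumference.
    Ignores defects, scribe lines, and die aspect ratio.
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


# Assumed die areas from public reporting, not from this review.
GA106_MM2 = 276.0   # RTX 3050 / RTX 3060 GPU
NAVI24_MM2 = 107.0  # Radeon RX 6500 XT GPU

nvidia_dies = gross_dies_per_wafer(GA106_MM2)
amd_dies = gross_dies_per_wafer(NAVI24_MM2)

print(f"Die area ratio: {GA106_MM2 / NAVI24_MM2:.2f}x")
print(f"Approx. dies per wafer: GA106 {nvidia_dies}, Navi 24 {amd_dies}")
print(f"Dies-per-wafer advantage: {amd_dies / nvidia_dies:.2f}x")
```

Under those assumptions, the smaller die's advantage in chips per wafer comes out slightly larger than the raw area ratio, because small dies also waste less space at the wafer's edge.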

We’ll see how it goes. If the RTX 3050 disappears from retailers and pops up on Ebay for 1.5x to 2x its MSRP like every other modern GPU has, it’s a lot less appealing. But if you can score one for $250 to $300 in today’s wild market, snatch it up pronto. There’s nothing else in this price range (new or used) that can hang with it, especially the Radeon RX 6500 XT.
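
For reference, here is what those scalper multipliers would look like in dollars, as a tiny Python sketch. The $250 MSRP and the 1.5x to 2x range come from this review; the resulting prices are simple arithmetic, not observed listings.

```python
MSRP_USD = 250  # Nvidia's suggested price for the RTX 3050, as cited in this review


def marked_up_price(msrp: float, multiplier: float) -> float:
    """Street price implied by a given markup multiplier over MSRP."""
    return msrp * multiplier


low = marked_up_price(MSRP_USD, 1.5)
high = marked_up_price(MSRP_USD, 2.0)

print(f"1.5x to 2x MSRP works out to ${low:.0f} to ${high:.0f}")
```

At those prices the card would land well above the $250 to $300 window where it is an easy recommendation.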
