A new approach that could improve how robots interact in conversational groups

To effectively interact with humans in crowded social settings, such as malls, hospitals, and other public spaces, robots should be able to actively participate in both group and one-to-one interactions. Most existing robots, however, have been found to perform much better when communicating with individual users than with groups of conversing humans.

Hooman Hedayati and Daniel Szafir, two researchers at the University of North Carolina at Chapel Hill, have recently developed a new data-driven technique that could improve how robots communicate with groups of humans. This method, described in a paper presented at the 2022 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’22), allows robots to predict the positions of humans in conversational groups, so that they do not mistakenly ignore a person when their sensors are fully or partly obstructed.

“Being in a conversational group is easy for humans but challenging for robots,” Hooman Hedayati, one of the researchers who carried out the study, told TechXplore. “Imagine that you are talking with a group of friends, and whenever one of your friends blinks, she stops talking and asks if you are still there. This potentially annoying scenario is roughly what can happen when a robot is in conversational groups.”

One of the reasons why many robots occasionally misbehave while participating in a group conversation is that their actions rely heavily on data collected by their sensors (e.g., cameras and depth sensors). Sensors, however, are prone to errors, and can sometimes be obstructed by sudden movements and obstacles in the robot’s surroundings.

“If the robot’s camera is masked by an obstacle for a second, similarly to when people blink, the robot might not see that person, and as a result, it ignores the user,” Hedayati explained.

“Based on my experience, users find these misbehaviors disturbing. The key goal of our recent project was to help robots detect and predict the position of an undetected person within the conversational group.”

To predict the position of people in a conversational group, Hedayati and Szafir first developed an algorithm that checks a robot’s beliefs about who is part of the group and who is not. This algorithm can detect a robot’s errors (i.e., cases where it is ignoring the existence of one or more people in a conversational group). Subsequently, it predicts the position of the undetected user or users by analyzing the available data.
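The first step, checking the robot's beliefs about group membership, can be illustrated with a minimal sketch. This is not the researchers' algorithm: the `GroupBelief` class, its method names, and the two-second grace period are all hypothetical, chosen only to show how a robot might keep believing in a person who briefly drops out of its sensor data.

```python
from dataclasses import dataclass, field


@dataclass
class GroupBelief:
    """Hypothetical record of who the robot believes is in the group."""

    grace_period: float = 2.0  # seconds a member may go unseen (assumed value)
    last_seen: dict = field(default_factory=dict)  # person id -> last timestamp

    def update(self, detected_ids, now):
        """Record the people reported by the sensors at time `now`."""
        for person_id in detected_ids:
            self.last_seen[person_id] = now

    def missing_members(self, detected_ids, now):
        """Return members still believed present but absent from the detections."""
        detected = set(detected_ids)
        return sorted(
            person_id
            for person_id, seen_at in self.last_seen.items()
            if person_id not in detected and now - seen_at <= self.grace_period
        )


belief = GroupBelief()
belief.update({"ana", "ben", "cho"}, now=0.0)
belief.update({"ana", "ben"}, now=0.5)  # "cho" is briefly occluded
print(belief.missing_members({"ana", "ben"}, now=0.5))
```

In this sketch, a person who has not been seen for longer than the grace period is simply dropped from the list, which stands in for the robot concluding that they have left the group.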

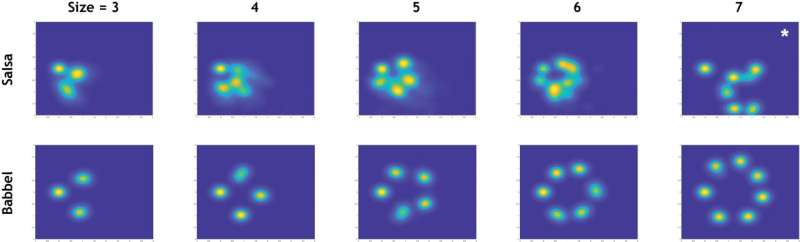

“Our approach is based on one of our past observations,” Hedayati explained. “Specifically, while we were cleaning the ‘Babble dataset’ (a human-human conversational groups dataset), we discovered that people tend to stay in predefined positions relative to each other. This means if we know the position of all people in a conversational group except for one, we can predict his/her position.”

The technique developed by Hedayati and Szafir was trained on a series of existing datasets, containing annotated footage of groups of human users conversing with each other. By analyzing the positions of other speakers in a group, it can accurately predict the position of an undetected user.
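To make the idea concrete, here is a small geometric sketch of predicting one missing position from the others. It is only an illustration under a strong assumption, namely that group members stand roughly on a circle and the missing person fills the widest gap. The researchers' technique is data-driven and trained on annotated datasets, so it does not necessarily work this way, and the function names `fit_circle` and `predict_missing_position` are hypothetical.

```python
import math

import numpy as np


def fit_circle(points):
    """Least-squares circle fit; needs at least 3 non-collinear points."""
    pts = np.asarray(points, dtype=float)
    # A circle satisfies x^2 + y^2 = 2*cx*x + 2*cy*y + c, with c = r^2 - cx^2 - cy^2.
    a = np.column_stack([2 * pts[:, 0], 2 * pts[:, 1], np.ones(len(pts))])
    b = (pts ** 2).sum(axis=1)
    (cx, cy, c), *_ = np.linalg.lstsq(a, b, rcond=None)
    radius = math.sqrt(c + cx ** 2 + cy ** 2)
    return (float(cx), float(cy)), radius


def predict_missing_position(known_positions):
    """Guess where one undetected member stands (assumed circular layout)."""
    (cx, cy), radius = fit_circle(known_positions)
    angles = sorted(math.atan2(y - cy, x - cx) for x, y in known_positions)
    # Angular gap from each member to the next one, including the wrap-around.
    gaps = [
        (angles[(i + 1) % len(angles)] - angle) % (2 * math.pi)
        for i, angle in enumerate(angles)
    ]
    widest = max(range(len(gaps)), key=gaps.__getitem__)
    theta = angles[widest] + gaps[widest] / 2  # middle of the widest gap
    return (cx + radius * math.cos(theta), cy + radius * math.sin(theta))


# Three detected people on a unit circle; the fourth is occluded.
detected = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]
x, y = predict_missing_position(detected)
print(round(x, 3), round(y, 3))
```

A learned model could replace the circle assumption with spatial patterns observed in real conversational groups, which is closer in spirit to what the article describes.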

“We showed that we can model human behaviors for robots so they have a better understanding of the dynamics of conversational groups,” Hedayati said.

In the future, the new approach introduced by this team of researchers could help to enhance the conversational abilities of both existing and newly developed robots. This might in turn make them easier to deploy in large public spaces, including malls and hospitals.

“We are dedicated to improving the human-robot conversational groups and there are many interesting open problems in this field (e.g., how to detect who is the active speaker, where robots should stand in a group, how to join a group, etc.),” Hedayati added. “The next step for us will be to improve the gaze behavior of robots in a conversational group. People find robots with a better gaze behavior more intelligent (e.g., robots that know who is talking and looking at him/her). We want to improve the gaze behavior of robots and make the human-robot conversational group more enjoyable for humans.”

Hooman Hedayati and Daniel Szafir, Predicting positions of people in human-robot conversational groups, HRI ’22: Proceedings of the 2022 ACM/IEEE International Conference on Human-Robot Interaction (2022). DOI: 10.5555/3523760.3523815. dl.acm.org/doi/abs/10.5555/3523760.3523815

Hooman Hedayati et al., REFORM: Recognizing F-formations for Social Robots, 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2021). DOI: 10.1109/IROS45743.2020.9340708

© 2022 Science X Network

Citation: A new approach that could improve how robots interact in conversational groups (2022, April 4), retrieved 4 April 2022 from https://techxplore.com/news/2022-04-approach-robots-interact-conversational-groups.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
