AI will help phone photos surpass the DSLR, says Qualcomm

Today’s best smartphones are photography and videography beasts. Typical high-end devices sport multiple rear cameras across various zoom factors, a ton of horsepower for processing images and videos, and comprehensive camera apps with loads of modes.

We’re already familiar with technologies like 10x periscope cameras, 8K video recording, advanced object-erasing smarts, and more. What’s next for the industry though? We spoke to Judd Heape, vice-president of product management for cameras at Qualcomm, to look at the future of smartphone photography.

AI to be the foundation for the future?

Eric Zeman / Android Authority

Smartphone photography has leaned heavily on machine learning over the years; it’s used for tasks like noise reduction, object/shadow/reflection removal, reducing video judder, and more. This is clearly going to remain one of the biggest focus areas for smartphone brands and chipmakers alike in the next few years, and Heape feels it will actually be the biggest single focus area. He also outlines a few ways that AI will step up in the coming years.

Going forward in the future, we see a lot more AI capability to understand the scene, to understand the difference between skin and hair, and fabric and background and that sort of thing. And all those pixels being handled differently in real-time, not just post-processing a couple of seconds after the snapshot is taken but in real-time during like a camcorder video shoot.

Fortunately, we’re already seeing advanced, AI-driven image processing running on videos or at video-like frame rates. For example, some phone brands offer real-time viewfinder previews when using features like night modes, while Google uses its HDRNet neural network for video HDR.

AI is one of the biggest developments in the camera space, and Qualcomm camera bigwig Judd Heape thinks it could handle the entire image capture process in the future.

The use of AI has come some way from the modes first introduced in 2018, but Heape explains that AI in photography can be divided into four stages.

The first stage is pretty basic; AI is used to understand a specific thing in an image or scene. The second stage sees AI control the so-called 3A features, namely auto-focus, auto-white balance, and auto-exposure adjustments. The Qualcomm engineer reckons that the industry is currently at the third stage of the AI photography game, where AI is used to understand the different segments or elements of the scene.

We’re three to five years away from reaching the holy grail of AI photography.

This does indeed appear to be the case right now, as technologies like semantic segmentation and facial/eye recognition leverage AI to recognize specific subjects/objects in a scene and adjust them accordingly. For example, today’s phones can recognize a face and make sure it’s properly exposed, or recognize that the horizon is skewed and suggest you hold the phone properly.

What about the fourth stage? Heape says we’re roughly three to five years away from reaching the holy grail, which will see AI processing the entire image:

Imagine a world from the future where you’d say ‘I want the picture to look like this National Geographic scene,’ and the AI engine would say ‘okay, I’m going to adjust the colors and the texture and the white balance and everything to look like and feel like this image you just showed me’.

In fact, we’ve seen glimpses of this future with LG phones and the Graphy app in the late 2010s. This app for LG phones allowed you to choose a model photo, with the camera app then adjusting settings like exposure, white balance, and shutter speed to capture a similar result. But presumably, Qualcomm’s vision for this future would entail more granular adjustments to truly capture the look and feel of the desired image.

Could smartphones overtake DSLR cameras?

Eric Zeman / Android Authority

Sony made some interesting claims earlier this year when an executive predicted that photos from smartphones would surpass images from DSLR cameras within the next few years. It’s easy to imagine, given the progress we’ve seen from smartphones by harnessing superior processing. But phones still have smaller components due to the thinner form factor compared to DSLRs.

It’s something that Heape acknowledges, although he still reckons that smartphones will move past dedicated cameras. “In terms of getting towards the image quality of a DSLR, yes. I think the image sensor is there, I think the amount of innovation that’s going into mobile image sensors is probably faster and more advanced than what’s happening in the rest of the industry.”

He further elaborated on the processing power available inside smartphones as a significant advantage over DSLR cameras:

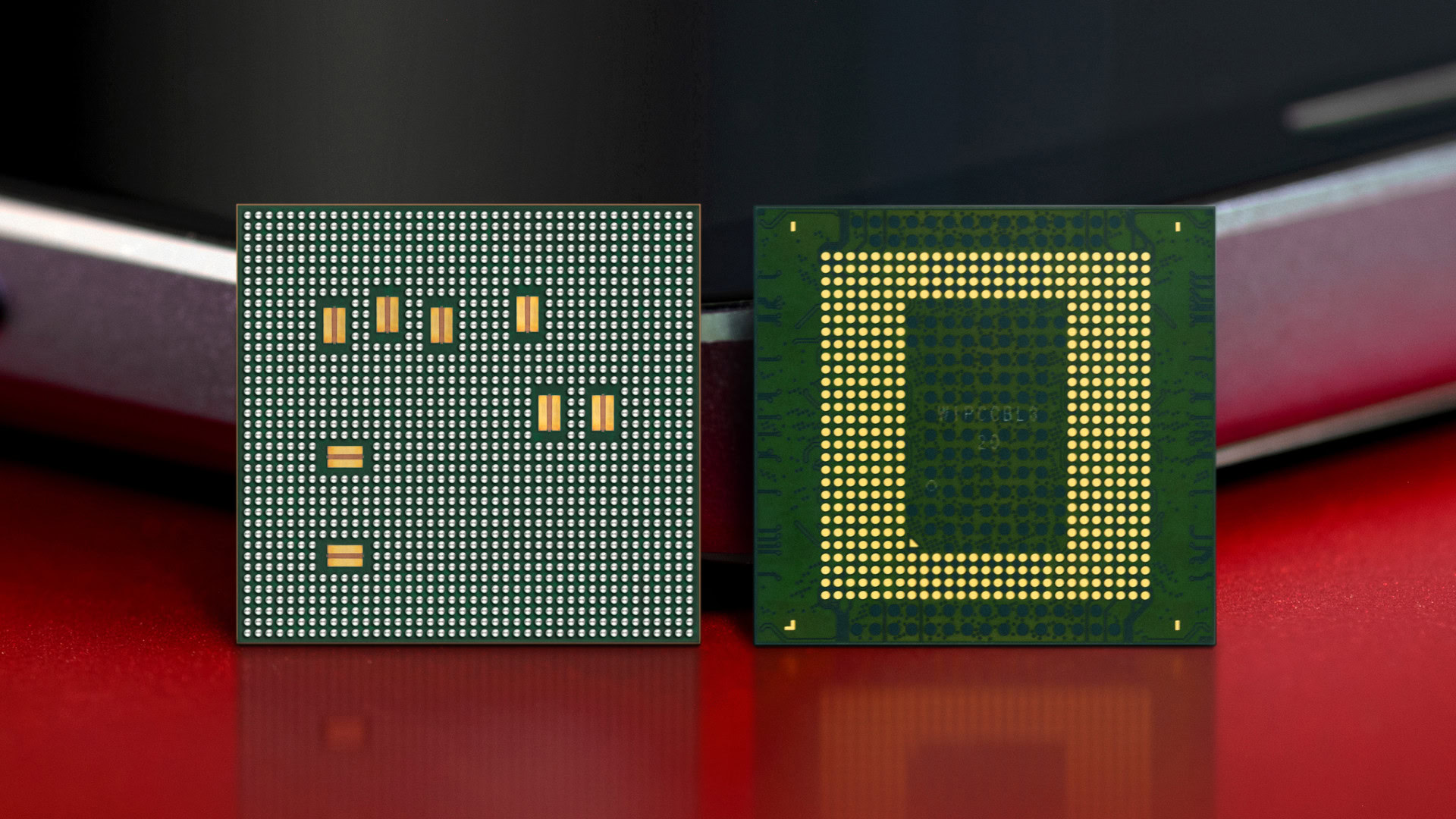

The processing in Snapdragon is 10 times better than what you can find on the biggest and baddest Nikon and Canon cameras. And that’s why we’re able to really push the barrier on image quality. Because even though we have a small lens and small image sensor, we’re doing many, many times more processing than what’s even capable in a DSLR.

It’s hard to argue otherwise, as the pace of smartphone chip development means we’re still seeing big performance and efficiency gains these days when it comes to camera-related tasks. For example, the Snapdragon 865 series delivered unlimited 960fps slow-motion, the Snapdragon 8 Gen 1 offers 8K HDR video, and the Snapdragon 888 series introduced simultaneous 4K HDR recording via three cameras. Throw in ever-improving multi-frame processing and smartphones are pushing camera boundaries in impressive ways.

Megapixels versus sensor size

Sony’s DSLR/smartphone prediction comes as the company introduces one-inch sensors to smartphones. We saw the first such sensors land on the 20MP Sharp Aquos R6 and 12MP Xperia Pro-I last year, while Xiaomi and Sony also worked together to bring a brand-new 50MP IMX989 one-inch sensor to the Xiaomi 12S Ultra.

But this isn’t the only approach we’ve seen in the smartphone arena. The megapixel war has quietly reignited in the last few years. In 2018 and 2019, 48MP cameras were a big deal, but we’ve since seen 108MP cameras become a common fixture. And the war continued recently with the reveal of the first phone with a 200MP camera. Rumors persist that Samsung could offer a 200MP camera inside next year’s Galaxy S23 Ultra.

We’ve seen a new chapter open in the years-long sensor size versus megapixel war.

Qualcomm’s arch-rival MediaTek already supports 320MP cameras on the Dimensity 9000 series of chips, and Heape thinks this resolution could be the next stopping point for the industry. “320MP probably is the next place we’re going to stop,” the Qualcomm representative explained.

There is clearly room for sensors with plenty of megapixels and sensors with bigger pixels, as companies like Samsung and Xiaomi take both approaches. Heape still has a preference, though:

My philosophy is maybe different than some people in the industry where I don’t necessarily think we need hundreds and hundreds of megapixels. I think we need bigger pixels, right? We need to approach the DSLR and maybe take the sweet spot of around 40 or 50MP rather than going 200MP and 300MP. Although there are pushes in the industry to go in both directions.
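
The trade-off Heape describes comes down to simple arithmetic: on a sensor of fixed size, more megapixels means smaller pixels. The sketch below is only a rough illustration. It assumes an active area of about 12.8 mm by 9.6 mm for a 4:3 one-inch-type sensor (an approximation that does not come from Qualcomm or Sony), ignores the gaps between pixels, and uses a made-up helper name, `pixel_pitch_um`.

```python
import math

# Assumption: approximate active area of a 4:3 "one-inch type" sensor, in mm.
# Real sensors differ slightly; this figure is for illustration only.
SENSOR_WIDTH_MM = 12.8
SENSOR_HEIGHT_MM = 9.6


def pixel_pitch_um(megapixels, width_mm=SENSOR_WIDTH_MM, height_mm=SENSOR_HEIGHT_MM):
    """Estimate the side length of one square pixel, in micrometers."""
    area_um2 = (width_mm * 1000) * (height_mm * 1000)  # sensor area in square micrometers
    return math.sqrt(area_um2 / (megapixels * 1e6))


for mp in (50, 200, 320):
    print(f"{mp}MP: about {pixel_pitch_um(mp):.2f} micrometers per pixel")
```

Under these assumptions, quadrupling the pixel count from 50MP to 200MP halves the width of each pixel, which is why very high-resolution sensors typically lean on pixel binning to recover low-light performance.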

You have to wonder where we’ll stop in terms of sensor size, given the slim form factor of smartphones. Heape suggests that innovative technologies such as anamorphic lenses could help drive further size increases, but it’ll be a tough ask in the short term.

In the direct near term, no, I don’t see us going above one inch. But in the future, yes, we can probably get there.

The future of specialized hardware and video

Today’s smartphones also pack dedicated silicon for specific imaging tasks. Sure, you’ve got your image signal processor, as you’d expect. But we’ve also seen chipmakers introducing bokeh engines for depth, as well as hardware for facial detection. It seems inevitable that another task or scenario will soon require a standalone piece of silicon too.

Heape explains that video is currently the biggest focus area for hardware accelerators, pointing to the aforementioned bokeh engine as well as computer vision hardware. But he also teased another addition in future products.

We will have announcements very soon where we’re gonna have dedicated hardware to handle, like I said, different parts of the scene. Hardware to know what to do for pixels that are skin, versus hair, versus fabric, versus sky, versus grass, versus background. Those are the areas — and again those all apply to video — where we really see the need to add specific hardware.

For what it’s worth, semantic image segmentation already allows a smartphone camera to identify various aspects of the image. This all takes place at a more general level, though, identifying a sunset, faces, animals, buildings, and flowers, and adjusting camera app settings accordingly. It certainly sounds like Qualcomm’s upcoming solution will dive deeper, applying camera tweaks on a more detailed level. This could also theoretically be a boon for augmented reality applications by allowing for more granular filters and other effects, but we’ll have to wait for news on this solution.
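
To make the idea of handling pixels differently by segment more concrete, here is a toy sketch. It is not Qualcomm’s method: the class names, the gain values, and the `apply_per_class_gain` function are all hypothetical, and a real pipeline would run on dedicated hardware with far more sophisticated per-class processing than a brightness tweak.

```python
# Illustrative only: class names and gain values are invented for this sketch.
GAIN_BY_CLASS = {"skin": 1.10, "sky": 0.95, "grass": 1.05, "background": 1.00}


def apply_per_class_gain(luma, labels, gains=GAIN_BY_CLASS):
    """Scale each pixel's brightness by the gain assigned to its segment class.

    luma   -- 2D list of brightness values in the range 0..255
    labels -- 2D list of class names with the same shape as luma
    """
    out = []
    for luma_row, label_row in zip(luma, labels):
        out_row = []
        for value, label in zip(luma_row, label_row):
            gain = gains.get(label, 1.0)  # unknown classes are left untouched
            out_row.append(min(255, round(value * gain)))  # clamp to the 8-bit maximum
        out.append(out_row)
    return out


# A 2x2 "image": the top row is sky, the bottom row is skin.
luma = [[200, 200], [100, 250]]
labels = [["sky", "sky"], ["skin", "skin"]]
result = apply_per_class_gain(luma, labels)
print(result)
```

The point of the sketch is the lookup step: once every pixel carries a class label, each class can be routed to its own processing, which is the kind of work the dedicated hardware Heape describes would presumably do at video frame rates.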

We’ve seen a rise in hardware dedicated to specific imaging tasks, but video could be the next frontier in this regard.

More broadly, we’ve seen video quality make big strides over the past few years too, but it seems doubtful that we’ll see 8K/60fps soon, given that 8K video in general still seems so niche to start with. Heape agrees, suggesting that 8K/30fps is fine for now.

I think we’ve got the resolution and frame rate to a point now where it won’t change for a while. We’re already shooting in 8K/30. We might go to 8K/60, but it’ll be a few years. It’s a tremendous amount of throughput and frankly what we bump into is power consumption limitations as we go higher and higher.
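
A quick back-of-the-envelope calculation shows why the jump to 8K/60 is so demanding. The sketch below assumes 8K means 7680 x 4320 pixels (the article does not specify the exact resolution) and counts raw pixels only, ignoring bit depth, HDR, and multi-frame processing, all of which would push the real workload higher.

```python
def pixels_per_second(width, height, fps):
    """Raw number of pixels that must be processed every second."""
    return width * height * fps


# Assumption: 8K UHD and 4K UHD frame sizes.
W8K, H8K = 7680, 4320
W4K, H4K = 3840, 2160

rate_4k60 = pixels_per_second(W4K, H4K, 60)
rate_8k30 = pixels_per_second(W8K, H8K, 30)
rate_8k60 = pixels_per_second(W8K, H8K, 60)

print(f"4K/60: {rate_4k60 / 1e9:.2f} gigapixels per second")
print(f"8K/30: {rate_8k30 / 1e9:.2f} gigapixels per second")
print(f"8K/60: {rate_8k60 / 1e9:.2f} gigapixels per second")
```

On these assumptions, 8K/30 already moves about twice as many pixels as 4K/60, and 8K/60 doubles that again to roughly two gigapixels per second, which fits Heape’s point about throughput and power limits.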

If anything, we expect more refinements to come for 8K video and below. After all, Qualcomm introduced 8K HDR with the Snapdragon 8 Gen 1, while recent innovations like super-stable video, AI-powered low-light video, and night hyperlapses take place at 4K or 1080p. We got a taste of this with Apple’s iPhone 14 series, bringing Cinematic video recording at 4K and super-stable video at “2.8K.”

Heape reckons that there is scope for improvements such as better “handling of motion and understanding motion in a scene.” He points to goals like handling motion in videos without ghosting and tackling motion and noise at the same time. “All these basic image quality principles that we tackled using multi-frame techniques in snapshot, we need to handle in real-time for 8K/30 video.”

Don’t bank on quality under-display selfie cameras

Hadlee Simons / Android Authority

We reviewed a couple of phones with under-display selfie cameras in the last year or two, and it’s clear that even the latest solutions can’t hold a candle to conventional cameras. In fact, we pitted the ZTE Axon 40 Ultra against mid-range phones a few weeks ago, finding that even a mid-range phone from 2018 could beat ZTE’s under-display tech.

Needless to say, we don’t recommend buying a phone with an under-display camera unless selfies aren’t important to you at all.

Under-display cameras may eventually look reasonable, but that’s about it.

“I understand people just want a very sleek, wonderful looking end-to-end display with nothing hindering it. But for people who really want to do good photography, I don’t think it’s the direction we should be going in,” Heape asserts, pointing to diffusion problems, color issues, and “weird” artifacts at night.

“But the things we’re achieving in photography now — really good zoom, understanding of the scene, segmentation, depth, good 3A processing — all of this just gets worse if you try to put the camera underneath a bunch of pixels.”

We aren’t confident that under-display cameras will truly take the fight to conventional selfie cameras on high-end phones. Heape isn’t holding his breath either:

So will we get it [under-display cameras – ed] good enough to where a typical selfie will look reasonable? Yeah, but anything beyond that is going to be really, really hard.

Ditching the smartphone zoom camera?

Robert Triggs / Android Authority

Telephoto cameras have been a fixture on smartphones since 2016, with modern optical zoom topping out at a long-range but fixed 10x. Sony has also innovated in this area with the Xperia 1 IV, introducing a variable telephoto camera capable of shooting at a variety of native zoom factors. This means you don’t need two separate telephoto/periscope cameras. But Heape reckons it could also result in future phones ditching the dedicated telephoto camera altogether in favor of a main camera that doubles as a tele lens.

I think the coolest thing about that is that it has the potential to reduce the complexity of the camera system. Whereas right now you need three cameras, like a wide, ultrawide, and telephoto. If you can actually have a true optical zoom, maybe you can combine the wide and telephoto into one camera, and just have two cameras maybe and simplify the camera system, lower the power consumption, etc.

Early rumors point to a 2023 phone like the Galaxy S23 Ultra maintaining the same camera setup as the S22 Ultra (i.e. two telephoto cameras, a main camera, and an ultrawide shooter). But could we see any players take this route soon?

“So yes, I see this as something that will happen in the next year, I think a lot of OEMs will move this way,” Heape explained.

This could be a pretty significant development for the smartphone industry, but we do wonder if this is a slam-dunk move. After all, variable telephoto components occupy about as much internal space as a periscope camera. So companies hoping to pair a variable main/short-range tele camera with a long-range periscope sensor might run into challenges here.

Smartphone cameras will keep evolving

Robert Triggs / Android Authority

There have been many exciting developments in the smartphone camera space in the last few years. In terms of hardware, we’ve seen large sensors become commonplace, along with custom imaging chips, impressive zoom camera systems, and a ton of processing grunt. But we’ve also seen fantastic software improvements, such as super steady video modes, object erasing, improved HDR, simultaneous video capture streams, and more.

The next few years are also looking bright in terms of innovation in this space. Between ever-growing sensor sizes and megapixel counts, a potential reduction in cameras due to variable telephoto tech, AI handling more camera tasks, and more dedicated hardware, there’s still plenty to get excited about with the next generation of smartphone cameras.

What are you looking forward to from future phone cameras?
