Features of virtual agents affect how humans mimic their facial expressions

In recent years, computer scientists have developed a broad variety of virtual agents, artificial agents designed to interact with humans or assist them with various tasks. Some past findings suggest that the extent to which human users trust these agents often depends on how much they perceive them to be likable and pleasant.

Psychology research suggests that when humans like each other or find each other pleasant, they tend to mimic each other’s movements and facial expressions. People could thus potentially exhibit the same facial mimicry when they find a robot or virtual agent particularly likable.

Researchers at Uppsala University, University of Potsdam, Sorbonne Université and other institutes worldwide have recently carried out a study exploring the extent to which specific features of an artificial agent might affect how much human users mimic its facial expressions. Their paper, pre-published on arXiv, specifically examined the impact of two key features, namely an agent’s embodiment and the extent to which it resembles humans.

In their experiments, the researchers asked 45 participants to complete an emotion recognition task while interacting with three artificial agents, namely a physical Furhat robot, a video-recorded Furhat robot and a fully virtual agent. In the first phase of the experiment, participants were asked to simply observe the agent’s facial expressions and state which of the six basic emotions they conveyed. In the second phase, they were asked to observe the agent’s facial expressions and mimic them as closely as possible.

The researchers then analyzed the data collected in the first phase of the experiment to determine the extent to which participants spontaneously mimicked the artificial agent’s facial expressions, and the data collected in the second phase to understand how well they mimicked these expressions when they were explicitly asked to do so.

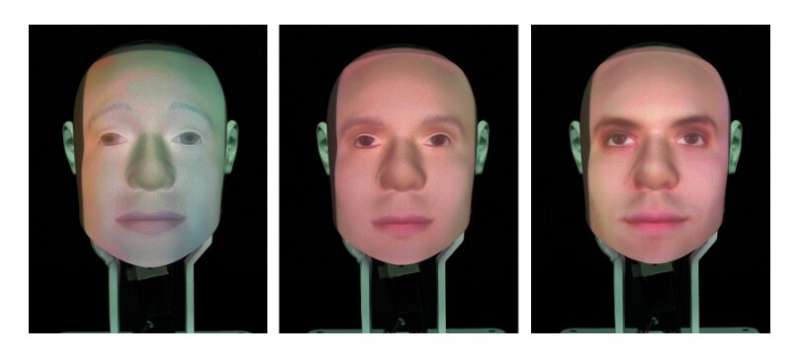

“Participants were randomly assigned to one level of human-likeness (between-subject variable: humanlike, characterlike or morph facial texture of the artificial agents) and observed the facial expressions displayed by a human (control) and three artificial agents differing in embodiment (within-subject variable: video-recorded robot, physical robot and virtual agent),” the researchers explained in their paper. “Contrary to our hypotheses, our results disclose that the agent that was perceived as the least uncanny, and most anthropomorphic, likable and co-present was the one spontaneously mimicked the least.”
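The mixed design described in the quote can be sketched in a few lines of Python. This is only an illustration of how a between-subject factor and a within-subject factor combine, not the researchers' actual procedure: the function name `assign_conditions`, the balanced group sizes and the per-participant shuffling of the presentation order are all assumptions that do not come from the paper.

```python
import random

# Between-subject factor: each participant sees only ONE facial texture.
HUMAN_LIKENESS = ["humanlike", "characterlike", "morph"]
# Within-subject factor: each participant sees ALL of these.
EMBODIMENTS = ["human (control)", "video-recorded robot",
               "physical robot", "virtual agent"]


def assign_conditions(n_participants, seed=0):
    """Hypothetical helper: one texture and every embodiment per participant."""
    rng = random.Random(seed)
    # Assumption: balanced groups, built by cycling through the levels
    # and then shuffling who gets which level.
    textures = [HUMAN_LIKENESS[i % len(HUMAN_LIKENESS)]
                for i in range(n_participants)]
    rng.shuffle(textures)

    plan = []
    for pid, texture in enumerate(textures, start=1):
        # Assumption: presentation order is shuffled for each participant.
        order = rng.sample(EMBODIMENTS, k=len(EMBODIMENTS))
        plan.append({"participant": pid,
                     "human_likeness": texture,
                     "embodiments": order})
    return plan


plan = assign_conditions(45)
```

Each entry in `plan` holds a single human-likeness level but the full set of embodiments, which is what separates a between-subject variable from a within-subject one.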

Interestingly, the researchers found that participants were more likely to spontaneously mimic the facial expressions of artificial agents that they found less likable. This finding is in stark contrast with past psychology findings on facial mimicry in human-human interactions.

In addition, the team found that instructed facial mimicry negatively predicted spontaneous facial mimicry. In other words, the more closely participants mimicked the facial expressions of agents when they were asked to do so, the less likely they were to mimic the agents spontaneously.
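To make "negatively predicted" concrete, the short Python sketch below fits a straight line to a handful of invented scores and checks the sign of the slope. The numbers are made up purely for illustration and are not data from the study, and a simple least-squares slope is an assumption standing in for whatever statistical model the researchers actually used.

```python
def ols_slope(x, y):
    """Slope of the least-squares line predicting y from x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    variance = sum((xi - mean_x) ** 2 for xi in x)
    return covariance / variance


# Invented scores for five hypothetical participants (NOT study data).
instructed = [0.2, 0.4, 0.5, 0.7, 0.9]    # how closely they mimicked on request
spontaneous = [0.8, 0.7, 0.5, 0.4, 0.1]   # how much they mimicked unprompted

slope = ols_slope(instructed, spontaneous)
print("negative predictor" if slope < 0 else "non-negative predictor")
```

A slope below zero means that higher instructed-mimicry scores go together with lower spontaneous-mimicry scores, which is the direction of the relationship the team reported.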

“If people are better able to mimic all the temporal dynamics of a target facial expression, they might also be more capable of recognizing that target emotion,” the researchers wrote in their paper. “In this sense, as opposed to spontaneous facial mimicry, instructed facial mimicry might signal a better understanding of the emotion.”

In their paper, the researchers hypothesize that specifically asking participants to recognize the emotions of virtual agents might change the usual patterns in facial mimicry reported by past psychology studies. In other words, participants might have been more prone to spontaneously mimic the expressions of less likable agents because they found them harder to interpret.

“Further work is needed to corroborate this hypothesis,” the researchers added. “Nevertheless, our findings shed light on the functioning of human-agent and human-robot mimicry in emotion recognition tasks and help us to unravel the relationship between facial mimicry, liking and rapport.”

Does the goal matter? Emotion recognition tasks can change the social value of facial mimicry towards artificial agents. arXiv:2105.02098 [cs.HC]. arxiv.org/abs/2105.02098

© 2021 Science X Network

Citation: Features of virtual agents affect how humans mimic their facial expressions (2021, June 17), retrieved 17 June 2021 from https://techxplore.com/news/2021-06-features-virtual-agents-affect-humans.html

