Hey Siri: Virtual Assistants Are Listening to Children and Using the Data

In many busy households around the world, it’s not uncommon for children to shout out directives to Apple’s Siri or Amazon’s Alexa. They may make a game out of asking the voice-activated personal assistant (VAPA) what time it is, or requesting a popular song. While this may seem like a mundane part of domestic life, there is much more going on. The VAPAs are continuously listening, recording and processing acoustic happenings in a process that has been dubbed “eavesmining,” a portmanteau of eavesdropping and datamining. This raises significant concerns about privacy and surveillance, as well as discrimination, as the sonic traces of people’s lives become datafied and scrutinised by algorithms.

These concerns intensify when we apply them to children. Their data is accumulated over lifetimes in ways that go well beyond what was ever collected on their parents, with far-reaching consequences that we haven’t even begun to understand.

Always listening

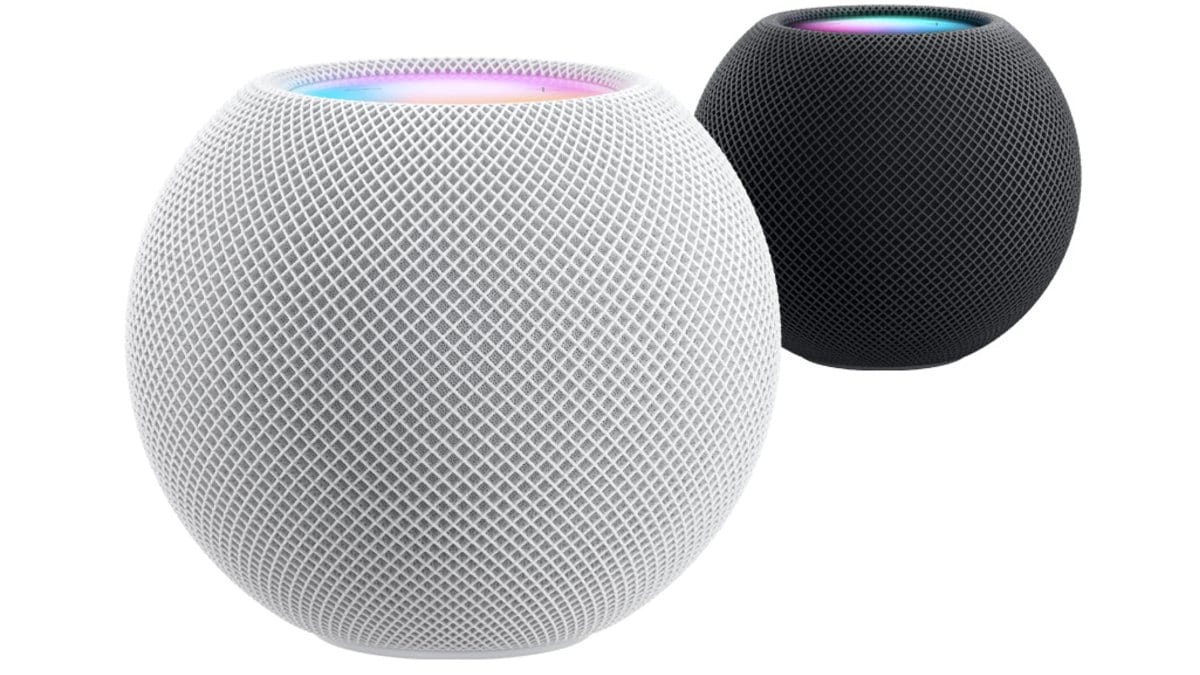

The adoption of VAPAs is proceeding at a staggering pace as it extends to mobile phones, smart speakers and the ever-increasing number of products that are connected to the Internet. These include children’s digital toys, home security systems that listen for break-ins, and smart doorbells that can pick up sidewalk conversations.

There are pressing issues arising from the collection, storage and analysis of sonic data as they pertain to parents, youth and children. Alarms have been raised before: in 2014, privacy advocates questioned how much the Amazon Echo was listening to, what data was being collected and how that data would be used by Amazon’s recommendation engines.

And yet, despite these concerns, VAPAs and other eavesmining systems have spread rapidly. Recent market research predicts that by 2024, the number of voice-activated devices will explode to over 8.4 billion.

Recording more than just speech

More than just spoken statements is being gathered. VAPAs and other eavesmining systems also overhear features of people’s voices that involuntarily reveal biometric and behavioural attributes such as age, gender, health, intoxication and personality.

Information about acoustic environments (like a noisy apartment) or particular sonic events (like breaking glass) can also be gleaned through “auditory scene analysis” to make judgments about what is happening in that environment.

Eavesmining systems already have a track record of collaborating with law enforcement agencies and being subpoenaed for data in criminal investigations. This raises concerns about other forms of surveillance creep and the profiling of children and families.

For example, smart speaker data may be used to create profiles such as “noisy households,” “disciplinary parenting styles” or “troubled youth.” This could, in the future, be used by governments to profile those reliant on social assistance or families in crisis with potentially dire consequences.

Some new eavesmining systems, called “aggression detectors,” are even presented as a way to keep children safe. These technologies consist of microphone systems loaded with machine learning software, dubiously claiming that they can help anticipate incidents of violence by listening for signs of rising volume and emotion in voices, and for other sounds such as glass breaking.

Monitoring schools

Aggression detectors are advertised in school safety magazines and at law enforcement conventions. They have been deployed in public spaces, hospitals and high schools under the guise of being able to preempt and detect mass shootings and other cases of lethal violence.

But there are serious issues around the efficacy and reliability of these systems. One brand of detector repeatedly misinterpreted children’s vocal cues, including coughing, screaming and cheering, as indicators of aggression. This raises the question of who is being protected and who will be made less safe by their design.

Some children and youth will be disproportionately harmed by this form of securitised listening, and the interests of all families will not be uniformly protected or served. A recurrent critique of voice-activated technology is that it reproduces cultural and racial biases by enforcing vocal norms and misrecognising culturally diverse forms of speech in relation to language, accent, dialect, and slang.

We can anticipate that the speech and voices of racialised children and youth will be disproportionately misinterpreted as aggressive. This troubling prediction should come as no surprise, as it follows the deeply entrenched colonial and white supremacist histories that consistently police a “sonic color line.”

Sound policy

Eavesmining is a rich site of information and surveillance, as the sonic activities of children and families have become valuable sources of data to be collected, monitored, stored, analysed and sold to thousands of third parties without their knowledge. These companies are profit-driven, with few ethical obligations to children and their data.

With no legal requirement to erase this data, it accumulates over children’s lifetimes, potentially lasting forever. It is unknown how long and how far these digital traces will follow children as they age, how widely this data will be shared or how much it will be cross-referenced with other data. These questions have serious implications for children’s lives, both now and as they grow up.

Eavesmining poses myriad threats in terms of privacy, surveillance and discrimination. Individualised recommendations, such as informational privacy education and digital literacy training, will be ineffective in addressing these problems and place too great a responsibility on families to develop the necessary literacies to counter eavesmining in public and private spaces.

We need to consider advancing a collective framework that combats the unique risks and realities of eavesmining. Perhaps developing a set of Fair Listening Practice Principles, an auditory spin on the “Fair Information Practice Principles,” would help evaluate the platforms and processes that impact the sonic lives of children and families.