Intel rolls out second-gen Loihi neuromorphic chip with big results in optimization problems | ZDNet

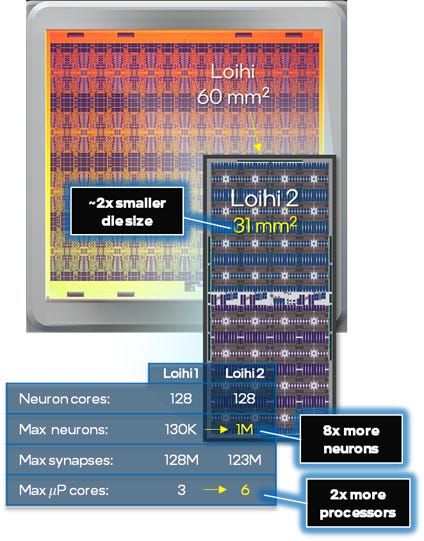

Loihi 2, pronounced “Low-EE-he,” is half the size of its predecessor and has eight times as many artificial spiking neurons. Here, the exterior of the chip is seen with its contacts for connecting to the circuit board.

Walden Kirsch/Intel

Intel on Thursday unveiled the second version of its Loihi neuromorphic chip, “Loihi 2,” a processor for artificial intelligence that it claims more aptly reflects the processes that occur in the human brain compared to other AI technology.

The new chip, made in a more advanced process node, is half the size of its predecessor, measuring 31 square millimeters, yet it contains one million artificial spiking neurons, eight times as many as the first Loihi.
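Taken together, those two figures imply a much larger jump in neuron density than either one alone. A back-of-the-envelope estimate, assuming the first chip's area was exactly twice 31 square millimeters (only the ratio is stated here), with $N$ for neuron count, $A$ for die area, and subscripts 1 and 2 for the two generations (symbols introduced just for this estimate):

$$
\frac{N_2 / A_2}{N_1 / A_1} = \frac{N_2}{N_1}\cdot\frac{A_1}{A_2} \approx 8 \times 2 = 16,
\qquad
\frac{N_2}{A_2} = \frac{10^6\ \text{neurons}}{31\ \text{mm}^2} \approx 3.2 \times 10^4\ \text{neurons/mm}^2
$$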

The chip also gains new flexibility from more extensive microcode underlying the operation of the spiking neurons.

Loihi, pronounced “low-EE-he,” is named for a Hawaiian seamount, a young volcano “that is emerging from the sea anytime now,” as Mike Davies, Intel’s director of neuromorphic computing, puts it.

The new chip also gets a new development framework, called Lava, which is written in Python and released as open source. Developers can work on programs for Loihi without having access to the hardware. The software is available on GitHub.

Intel unveiled the first version of Loihi back in 2017, describing it at the time as a chip that would “draw inspiration from how neurons communicate and learn, using spikes and plastic synapses that can be modulated based on timing.”

The chip is fashioned as a “mesh” of compute cores.

The first Loihi was fabricated using Intel’s 14-nanometer process technology. The new version uses Intel 4, the name Intel now gives that manufacturing generation after rebranding its process nodes. Intel 4 is believed to be roughly equivalent to a 7-nanometer process, though Intel hasn’t disclosed full details of the technology.

The chip gains a more-flexible microcode that lets programmers “allocate variables and execute a wide range of instructions organized as short programs using an assembly language,” Intel states.

These programs have access to neural state memory, the accumulated synaptic input for the present timestep, random bits for stochastic models, and a timestep counter for time-gated computation. The instruction set supports conditional branching, bitwise logic, and fixed-point arithmetic backed by hardware multipliers.
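Loihi 2's actual assembly language is not shown here, so as a hedged illustration of the kind of short program described, the sketch below writes a leaky integrate-and-fire neuron using only the listed ingredients: per-neuron state, the timestep's accumulated input, random bits, a timestep counter, a conditional branch, and fixed-point multiplication. The fixed-point format, the neuron model, and every name and parameter (`neuron_step`, `decay`, `active_until`, and so on) are assumptions for illustration, not Intel's instruction set.

```python
import random

# Hypothetical fixed-point format: integers scaled by 2**FRAC_BITS.
FRAC_BITS = 12
ONE = 1 << FRAC_BITS


def to_fixed(x: float) -> int:
    """Convert a real number to the illustrative fixed-point format."""
    return int(round(x * ONE))


def fixed_mul(a: int, b: int) -> int:
    """Fixed-point multiply (stands in for a hardware multiplier)."""
    return (a * b) >> FRAC_BITS


def neuron_step(state, synaptic_input, timestep, rng,
                decay=to_fixed(0.9), threshold=to_fixed(1.0),
                noise_bits=4, active_until=None):
    """Advance one leaky integrate-and-fire neuron by one timestep.

    state: dict holding the membrane voltage "v" (neural state memory)
    synaptic_input: accumulated synaptic input for this timestep (fixed-point)
    timestep: timestep counter, used here for time-gated computation
    rng: source of random bits for a stochastic model
    Returns True if the neuron spikes on this timestep.
    """
    # Time-gated computation: ignore input after an optional cutoff timestep.
    if active_until is not None and timestep > active_until:
        synaptic_input = 0
    # Leak (fixed-point multiply), then integrate this timestep's input.
    v = fixed_mul(state["v"], decay) + synaptic_input
    # Stochasticity: add a few random low-order bits of noise.
    v += rng.getrandbits(noise_bits)
    # Conditional branch: spike and reset when the threshold is crossed.
    if v >= threshold:
        state["v"] = 0
        return True
    state["v"] = v
    return False


rng = random.Random(0)
state = {"v": 0}
spikes = [neuron_step(state, to_fixed(0.3), t, rng) for t in range(20)]
print(spikes[:4])  # [False, False, False, True]
```

With a constant input of 0.3 and a decay of 0.9, the voltage needs four timesteps to climb past the threshold of 1.0, so the neuron fires every fourth step; the few bits of noise are too small to change that timing here.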

“It adds a lot of generality, programmability, whereas previously we were limited by the fixed feature sets in Loihi 1,” said Intel’s Davies in an interview with ZDNet by phone.

Intel has been improving performance at many levels with the chip, he said.

“We have workloads that will run over ten times faster, and at the circuit level, we are somewhere between two to seven times, depending on the parameter.” Intel has also boosted the “chip-to-chip scalability” by quadrupling the bandwidth on each link between cores.

The chip is able to scale into three dimensions, said Davies, by using inter-chip links.

Nearly 150 research groups around the world are now using Loihi, said Davies.

The contention of neuromorphic computing advocates is that the approach more closely emulates the actual characteristics of the brain’s functioning, such as the great economy with which the brain transmits signals.

Proponents of deep learning, which takes a different approach to AI, have criticized neuromorphic approaches for having achieved no practical results, in contrast to deep learning systems such as ResNet that can identify objects in pictures. Facebook’s head of AI, Yann LeCun, dismissed the neuromorphic approach in 2019 at a conference where he and Davies both spoke.

A photograph of the mesh of compute cores in Loihi 2.

Intel

In a paper published earlier this year in the Proceedings of the IEEE, Davies and colleagues presented evidence of what they say are demonstrable benefits of neuromorphic chips on a variety of problems. As they put it in the paper, neuromorphic chips gain advantages when a problem involves elements such as recurrence, which they link to the way the brain processes information:

While conventional feedforward deep neural networks show modest if any benefit on Loihi, more brain-inspired networks using recurrence, precise spike-timing relationships, synaptic plasticity, stochasticity, and sparsity perform certain computation with orders of magnitude lower latency and energy compared to state-of-the-art conventional approaches. These compelling neuromorphic networks solve a diverse range of problems representative of brain-like computation, such as event-based …
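The passage credits part of the gain to sparsity. A simplified way to see why, which makes no attempt to model Loihi's hardware: when inputs are binary spikes and most neurons are silent on a given timestep, an event-driven update only touches the synapses of neurons that actually fired, while a dense matrix-vector product touches every synapse. The function names and the operation-counting scheme below are hypothetical.

```python
import random


def dense_update(weights, inputs):
    """Dense matrix-vector product: every weight is multiplied by its input."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i, x in enumerate(inputs):
        for j, w in enumerate(weights[i]):
            out[j] += w * x
            ops += 1
    return out, ops


def event_driven_update(weights, spike_indices):
    """Event-driven update: only the weight rows of inputs that spiked are read,
    and since a spike is binary, each one simply adds its row (no multiply)."""
    out = [0.0] * len(weights[0])
    ops = 0
    for i in spike_indices:
        for j, w in enumerate(weights[i]):
            out[j] += w
            ops += 1
    return out, ops


rng = random.Random(1)
n_in, n_out = 1000, 10
weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_out)] for _ in range(n_in)]
spiking = sorted(rng.sample(range(n_in), 20))  # 2% of inputs fire this timestep
active = set(spiking)
inputs = [1.0 if i in active else 0.0 for i in range(n_in)]

dense_out, dense_ops = dense_update(weights, inputs)
event_out, event_ops = event_driven_update(weights, spiking)
print(dense_ops, event_ops)  # 10000 200
```

Both functions produce the same outputs, but the event-driven version's work scales with the number of spikes times the fan-out rather than with the full size of the weight matrix, which is where sparse activity pays off.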

“We have a portfolio of results that for the first time really quantitatively confirm the promise of neuromorphic hardware to deliver significant gains in energy efficiency, in the latency of processing, and the data efficiency of certain learning algorithms,” Davies told ZDNet.

“It’s been one of the really pleasant surprises to find that neuromorphic chips are fantastic at solving optimization problems,” said Davies. “It’s not that surprising because brains are always optimizing,” he said.

In deep learning, the process of making predictions, known as inference, is strictly a “feed-forward process,” said Davies. In contrast, he said, “the brain is a much more complex process than that, it’s always factoring feedback, its understanding of context and expectation, into its inferencing.”
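The contrast Davies draws can be illustrated with a deliberately tiny toy, a single scalar unit that models neither the brain nor Loihi: a feed-forward unit maps its input to an output in one pass, while a recurrent unit feeds its own previous output back in as context and iterates until its state settles. The functions and weights (`w`, `u`) are hypothetical.

```python
import math


def feedforward(x, w):
    """One pass from input to output; nothing is fed back."""
    return math.tanh(w * x)


def recurrent(x, w, u, steps=50):
    """Inference with feedback: the unit's previous output re-enters as
    context (weight u) and the state is iterated until it settles."""
    h = 0.0
    for _ in range(steps):
        h = math.tanh(w * x + u * h)
    return h


print(feedforward(0.5, 1.0))     # same input, no feedback
print(recurrent(0.5, 1.0, 0.5))  # same input, with feedback
```

With `u = 0` the two functions agree exactly; with positive feedback the recurrent unit settles to a larger response for the same input. Keeping `|u| < 1` makes the iteration a contraction, so 50 steps is ample for it to converge.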

Intel’s Loihi team describes benchmark results against conventional deep learning approaches. The Loihi entries are the three networks listed as “SLAYER,” standing for “Spike Layer Error Reassignment,” a tool developed in 2018 by scientists at the National University of Singapore to allow spiking neural networks to be trained with back-propagation.

Intel

As a result, “The brain is actually performing optimization,” he said. “As we’ve abstracted some of those capabilities in a standard mathematical framework, we’ve found we can solve problems like QUBO, quadratic unconstrained binary optimization,” a class of problems that has also been tackled with quantum computers.

“We’ve found we can actually solve QUBO problems fantastically well,” he said.
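For readers new to QUBO: an instance is a matrix Q, and the goal is to find the binary vector x that minimizes x^T Q x. Loihi's own spiking-network solver is not described here, so the sketch below swaps in classical simulated annealing, a standard stochastic heuristic and not Intel's method, together with exhaustive search for checking tiny instances. All function names and the example matrix are hypothetical.

```python
import itertools
import math
import random


def qubo_energy(Q, x):
    """Objective x^T Q x for a binary vector x; Q is an n-by-n list of lists."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def brute_force(Q):
    """Exact minimum by enumerating all 2**n assignments (tiny n only)."""
    best = min(itertools.product((0, 1), repeat=len(Q)),
               key=lambda x: qubo_energy(Q, x))
    return list(best), qubo_energy(Q, best)


def anneal(Q, steps=5000, t_start=2.0, t_end=0.01, seed=0):
    """Simulated annealing with single-bit flips and geometric cooling."""
    rng = random.Random(seed)
    n = len(Q)
    x = [rng.randint(0, 1) for _ in range(n)]
    e = qubo_energy(Q, x)
    best_x, best_e = x[:], e
    for k in range(steps):
        # Temperature falls geometrically from t_start to t_end.
        t = t_start * (t_end / t_start) ** (k / max(steps - 1, 1))
        i = rng.randrange(n)
        x[i] ^= 1                      # propose flipping one bit
        e_new = qubo_energy(Q, x)
        # Metropolis rule: always accept downhill, sometimes accept uphill.
        if e_new <= e or rng.random() < math.exp((e - e_new) / t):
            e = e_new
            if e < best_e:
                best_x, best_e = x[:], e
        else:
            x[i] ^= 1                  # reject: undo the flip
    return best_x, best_e


# Energy = -x0 - x1 - x2 + 2*x0*x1 + 2*x1*x2: reward each bit, penalize neighbors.
Q = [[-1, 2, 0],
     [0, -1, 2],
     [0, 0, -1]]
print(brute_force(Q))  # ([1, 0, 1], -2)
print(anneal(Q))
```

Recomputing the full energy on every flip keeps the code short but costs O(n²) per step; a practical solver would update the energy incrementally from the flipped bit's row and column.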

One early user of Loihi 2, the U.S. Department of Energy’s Los Alamos National Laboratory, reports having established connections across multiple domains with the chip. For example, Loihi is uncovering cross-currents between machine learning and quantum computing, according to Los Alamos staff scientist Dr. Gerd J. Kunde:

Investigators at Los Alamos National Laboratory have been using the Loihi neuromorphic platform to investigate the trade-offs between quantum and neuromorphic computing, as well as implementing learning processes on-chip. This research has shown some exciting equivalences between spiking neural networks and quantum annealing approaches for solving hard optimization problems. We have also demonstrated that the backpropagation algorithm, a foundational building block for training neural networks and previously believed not to be implementable on neuromorphic architectures, can be realized efficiently on Loihi. Our team is excited to continue this research with the second generation Loihi 2 chip.
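One reason the comparison with quantum annealing comes naturally is that a QUBO problem can be rewritten exactly as an Ising model, the spin-based form in which quantum annealers are usually described, through the change of variables x_i = (1 + s_i)/2 with s_i in {-1, +1}. The sketch below performs that textbook substitution and checks it by enumeration. It illustrates only the shared problem formulation, not the Los Alamos equivalence results, and the function names are hypothetical.

```python
import itertools


def qubo_energy(Q, x):
    """QUBO objective x^T Q x for binary x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def qubo_to_ising(Q):
    """Rewrite x^T Q x in spins s_i = 2*x_i - 1 (so x_i = (1 + s_i) / 2).

    Returns (J, h, offset) such that
    x^T Q x == offset + sum_i h[i]*s[i] + sum_{i != j} J[i][j]*s[i]*s[j].
    """
    n = len(Q)
    J = [[0.0] * n for _ in range(n)]
    h = [0.0] * n
    offset = 0.0
    for i in range(n):
        # Diagonal: Q_ii * x_i^2 = Q_ii * x_i = Q_ii * (1 + s_i) / 2.
        h[i] += Q[i][i] / 2
        offset += Q[i][i] / 2
        for j in range(n):
            if i != j:
                # Off-diagonal: Q_ij * (1 + s_i)(1 + s_j) / 4.
                J[i][j] = Q[i][j] / 4
                h[i] += Q[i][j] / 4
                h[j] += Q[i][j] / 4
                offset += Q[i][j] / 4
    return J, h, offset


def ising_energy(J, h, offset, s):
    """Ising energy for spins s_i in {-1, +1}; J has a zero diagonal."""
    n = len(s)
    return (offset + sum(h[i] * s[i] for i in range(n))
            + sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(n)))


Q = [[-1, 2, 0],
     [0, -1, 2],
     [0, 0, -1]]
J, h, offset = qubo_to_ising(Q)
for x in itertools.product((0, 1), repeat=3):
    s = [2 * xi - 1 for xi in x]
    assert abs(qubo_energy(Q, x) - ising_energy(J, h, offset, s)) < 1e-9
print(h, offset)  # [0.0, 0.5, 0.0] -0.5
```

Because the mapping is exact, a minimum found in either form is a minimum of the other, which is what lets the same instance be handed to different kinds of solvers.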

In a forthcoming paper submitted to IEEE Spectrum, Davies and team describe experiments with Loihi 2 on standard machine learning tasks, such as the Google Speech Commands data set, in which they trained a multi-layer perceptron model and compared it with the best-in-class deep network, a model built by Google researchers and reported last year.

The Loihi entries used a tool called “SLAYER,” standing for “Spike Layer Error Reassignment,” a program introduced in 2018 by Sumit Bam Shrestha and Garrick Orchard of the National University of Singapore to allow spiking neural networks to be trained with back-propagation.
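The obstacle a tool like SLAYER has to overcome is that a spike is an all-or-nothing event: its derivative is zero almost everywhere, so plain back-propagation has no gradient to pass back. The sketch below shows the generic workaround in the same spirit, a smooth pseudo-derivative used only in the backward pass, for a single neuron with no time dimension. It is not SLAYER's actual formulation, which also assigns credit across time, and the threshold, surrogate shape, and all names are assumptions.

```python
import math
import random

THETA = 1.0  # firing threshold (illustrative value)


def spike(u):
    """Forward pass: emit a spike (1.0) when the potential reaches threshold."""
    return 1.0 if u >= THETA else 0.0


def surrogate_grad(u, alpha=1.0, beta=2.0):
    """Backward pass: the step function's true derivative is zero almost
    everywhere, so use a smooth bump that peaks at the threshold instead."""
    return alpha * math.exp(-beta * abs(u - THETA))


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train(samples, n_inputs, epochs=100, lr=0.1, seed=0):
    """Gradient descent on L = (spike - target)^2 / 2 for one neuron."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
    for _ in range(epochs):
        for x, target in samples:
            u = dot(w, x)
            err = spike(u) - target          # dL/d(spike)
            g = err * surrogate_grad(u)      # chain rule through the surrogate
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
    return w


samples = [([1, 1, 0, 0], 1.0),   # should fire
           ([0, 0, 1, 1], 0.0)]   # should stay silent
w = train(samples, n_inputs=4)
print([spike(dot(w, x)) for x, _ in samples])  # [1.0, 0.0]
```

If `surrogate_grad` returned the true derivative of `spike` (zero away from the threshold), `g` would always be zero and the weights would never move; the surrogate is what lets the error reach them.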

One model, which incorporates convolutions, achieved 91.74% accuracy on that test, better than the standard deep network, while using far fewer parameters.
