OpenAI releases Point-E, which is like DALL-E but for 3D modeling

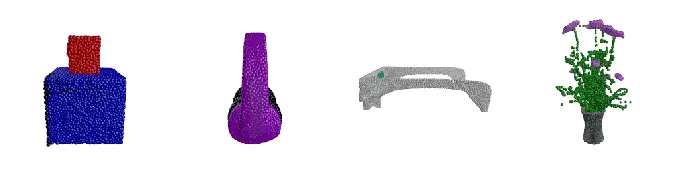

OpenAI, the artificial intelligence startup co-founded by Elon Musk and the maker of the popular DALL-E text-to-image generator, announced on Tuesday the release of its newest picture-making machine, Point-E, which can produce 3D point clouds directly from text prompts. Whereas existing systems like Google’s DreamFusion typically require multiple hours and multiple GPUs to generate their images, Point-E needs only one GPU and a minute or two.

3D modeling is used across a variety of industries and applications. The CGI effects of modern movie blockbusters, video games, VR and AR, NASA’s moon crater mapping missions, Google’s heritage site preservation projects, and Meta’s vision for the Metaverse all hinge on 3D modeling capabilities. However, creating photorealistic 3D images is still a resource- and time-consuming process, despite NVIDIA’s work to automate object generation and Epic Games’ RealityCapture mobile app, which allows anyone with an iOS device to scan real-world objects as 3D images.

Text-to-image systems such as OpenAI’s DALL-E 2, Craiyon, DeepAI, Prisma Labs’ Lensa and Stability AI’s Stable Diffusion have rapidly gained popularity, notoriety and infamy in recent years. Text-to-3D is an offshoot of that research. Point-E, unlike similar systems, “leverages a large corpus of (text, image) pairs, allowing it to follow diverse and complex prompts, while our image-to-3D model is trained on a smaller dataset of (image, 3D) pairs,” the OpenAI research team led by Alex Nichol wrote in Point·E: A System for Generating 3D Point Clouds from Complex Prompts, published last week. “To produce a 3D object from a text prompt, we first sample an image using the text-to-image model, and then sample a 3D object conditioned on the sampled image. Both of these steps can be performed in a number of seconds, and do not require expensive optimization procedures.”

If you input a text prompt, say, “A cat eating a burrito,” Point-E will first generate a synthetic-view 3D rendering of said burrito-eating cat. It will then run that generated image through a series of diffusion models to create a 3D, RGB point cloud of the initial image, first producing a coarse 1,024-point cloud and then a finer 4,096-point one. “In practice, we assume that the image contains the relevant information from the text, and do not explicitly condition the point clouds on the text,” the research team points out.
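To make the order of those stages concrete, here is a minimal Python sketch of the data flow only. It is not OpenAI’s code and does not call the Point-E library: the three stage functions are hypothetical stand-ins that return random data, and only the 1,024 and 4,096 point counts come from the description above. As in the quote, the prompt reaches the first stage only, and the point-cloud stages see just the image.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Point counts quoted in the article; everything else is a toy stand-in.
COARSE_POINTS = 1024
FINE_POINTS = 4096


@dataclass
class Point:
    x: float
    y: float
    z: float
    r: float  # colour channels in [0, 1]
    g: float
    b: float


def text_to_image(prompt: str, rng: random.Random) -> list[float]:
    """Hypothetical stand-in for the text-to-image model (returns fake pixels)."""
    return [rng.random() for _ in range(16)]


def image_to_coarse_cloud(image: list[float], rng: random.Random) -> list[Point]:
    """Hypothetical stand-in for the image-conditioned coarse diffusion model."""
    bias = sum(image) / len(image)  # toy "conditioning" on the image
    return [
        Point(rng.gauss(bias, 1.0), rng.gauss(bias, 1.0), rng.gauss(bias, 1.0),
              rng.random(), rng.random(), rng.random())
        for _ in range(COARSE_POINTS)
    ]


def upsample_cloud(coarse: list[Point], rng: random.Random) -> list[Point]:
    """Hypothetical stand-in for the upsampler: adds jittered copies of coarse points."""
    fine = list(coarse)
    while len(fine) < FINE_POINTS:
        base = rng.choice(coarse)
        fine.append(Point(base.x + rng.gauss(0.0, 0.01),
                          base.y + rng.gauss(0.0, 0.01),
                          base.z + rng.gauss(0.0, 0.01),
                          base.r, base.g, base.b))
    return fine


def text_to_point_cloud(prompt: str, seed: int = 0) -> list[Point]:
    """Chain the stages: text -> image -> coarse cloud -> fine cloud."""
    rng = random.Random(f"{seed}:{prompt}")  # string seeds are deterministic
    image = text_to_image(prompt, rng)
    coarse = image_to_coarse_cloud(image, rng)  # note: the prompt is not passed on
    return upsample_cloud(coarse, rng)


if __name__ == "__main__":
    cloud = text_to_point_cloud("A cat eating a burrito")
    print(len(cloud))
```

In the real system each stand-in would be a trained model, so the sketch shows only how the outputs hand off from one stage to the next.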

These diffusion models were each trained on “millions” of 3D models, all converted into a standardized format. “While our method performs worse on this evaluation than state-of-the-art techniques,” the team concedes, “it produces samples in a small fraction of the time.” If you’d like to try it out for yourself, OpenAI has posted the project’s open-source code on GitHub.
