Robot dog learns to walk in one hour

A newborn giraffe or foal must learn to walk as fast as possible to avoid predators. Animals are born with muscle coordination networks located in their spinal cord. However, learning the precise coordination of leg muscles and tendons takes some time. Initially, baby animals rely heavily on hard-wired spinal cord reflexes. Although fairly basic, these motor control reflexes help the animal avoid falling and hurting itself during its first walking attempts. More advanced and precise muscle control must then be practiced until the nervous system is well adapted to the young animal’s leg muscles and tendons. Once the uncontrolled stumbling stops, the young animal can keep up with the adults.

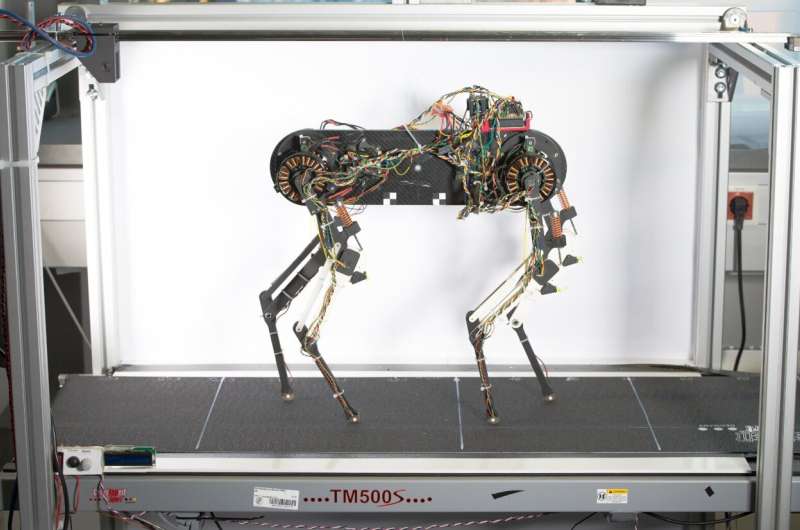

Researchers at the Max Planck Institute for Intelligent Systems (MPI-IS) in Stuttgart conducted a study to find out how animals learn to walk and learn from stumbling. They built a four-legged, dog-sized robot that helped them figure out the details.

“As engineers and roboticists, we sought the answer by building a robot that features reflexes just like an animal and learns from mistakes,” says Felix Ruppert, a former doctoral student in the Dynamic Locomotion research group at MPI-IS. “If an animal stumbles, is that a mistake? Not if it happens once. But if it stumbles frequently, it gives us a measure of how well the robot walks.”

Felix Ruppert is first author of “Learning Plastic Matching of Robot Dynamics in Closed-loop Central Pattern Generators,” which will be published July 18, 2022 in the journal Nature Machine Intelligence.

Learning algorithm optimizes virtual spinal cord

After learning to walk in just one hour, Ruppert’s robot makes good use of its complex leg mechanics. A Bayesian optimization algorithm guides the learning: the measured foot sensor information is matched with target data from the modeled virtual spinal cord running as a program in the robot’s computer. The robot learns to walk by continuously comparing sent and expected sensor information, running reflex loops, and adapting its motor control patterns.
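The matching of measured and expected foot contact can be illustrated with a small sketch. It is not the authors' implementation: the function name `contact_mismatch` and the choice of a simple per-time-step comparison are assumptions made for illustration. It only shows how a single number can summarize how far the sensed footfalls deviate from what the virtual spinal cord expects.

```python
def contact_mismatch(expected_contact, measured_contact):
    """Fraction of time steps where sensed foot contact differs from
    the contact the pattern generator expected (hypothetical metric)."""
    if len(expected_contact) != len(measured_contact):
        raise ValueError("sequences must have the same length")
    if not expected_contact:
        raise ValueError("sequences must not be empty")
    differing = sum(
        1 for e, m in zip(expected_contact, measured_contact) if bool(e) != bool(m)
    )
    return differing / len(expected_contact)


# Expected: foot on the ground for the first two samples, in the air afterwards.
expected = [1, 1, 0, 0]
# Measured: the foot left the ground one sample too early.
measured = [1, 0, 0, 0]
mismatch = contact_mismatch(expected, measured)
```

A value of zero would mean the sensed contacts agree with the expectation at every sample; larger values indicate more frequent disagreement, which an optimizer could then try to reduce.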

The learning algorithm adapts control parameters of a Central Pattern Generator (CPG). In humans and animals, these central pattern generators are networks of neurons in the spinal cord that produce periodic muscle contractions without input from the brain. Central pattern generator networks help generate rhythmic activities such as walking, blinking or digestion. Reflexes, in contrast, are involuntary motor control actions triggered by hard-coded neural pathways that connect sensors in the leg with the spinal cord.
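To make the idea of a pattern generator concrete, here is a minimal sketch of a phase-based oscillator that produces a periodic hip angle target without any sensor input. The function names, the piecewise-linear trajectory, and the trot phase offsets are illustrative assumptions, not the CPG model used in the study.

```python
def cpg_step(phases, frequency_hz, dt):
    """Advance each leg's phase (measured in cycles, 0..1) by one time step."""
    return [(p + frequency_hz * dt) % 1.0 for p in phases]


def hip_target(phase, amplitude_rad, duty_factor):
    """Map a leg phase to a hip angle target.

    Stance fills the first `duty_factor` fraction of the cycle (leg sweeps
    from +amplitude to -amplitude); swing fills the rest (leg returns).
    """
    if phase < duty_factor:
        progress = phase / duty_factor
        return amplitude_rad * (1.0 - 2.0 * progress)
    progress = (phase - duty_factor) / (1.0 - duty_factor)
    return amplitude_rad * (-1.0 + 2.0 * progress)


# Assumed trot pattern: diagonal leg pairs share a phase.
phases = [0.0, 0.5, 0.5, 0.0]
for _ in range(10):
    targets = [hip_target(p, amplitude_rad=0.3, duty_factor=0.6) for p in phases]
    phases = cpg_step(phases, frequency_hz=2.0, dt=0.01)
```

The three arguments `amplitude_rad`, `frequency_hz`, and `duty_factor` loosely correspond to how far the legs swing, how fast they swing, and how long a leg stays on the ground.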

As long as the young animal walks over a perfectly flat surface, CPGs can be sufficient to control the movement signals from the spinal cord. A small bump on the ground, however, changes the walk. Reflexes kick in and adjust the movement patterns to keep the animal from falling. These momentary changes in the movement signals are reversible, or “elastic,” and the movement patterns return to their original configuration after the disturbance. But if the animal keeps stumbling over many cycles of movement, despite active reflexes, then the movement patterns must be relearned and made “plastic,” i.e., irreversible. In the newborn animal, CPGs are initially not yet adjusted well enough and the animal stumbles around on both even and uneven terrain. But the animal rapidly learns how its CPGs and reflexes control leg muscles and tendons.
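The difference between an elastic and a plastic change can be sketched in a few lines. Everything here is a toy assumption: the decay rate, the stumble threshold, and the fixed step size are hypothetical values chosen only to show that an elastic correction fades back to the baseline, while a plastic update moves the baseline itself.

```python
def elastic_response(baseline, disturbance, decay=0.5, steps=5):
    """Reflex-style correction: a temporary offset that decays away,
    so the pattern returns toward its original baseline."""
    offset = disturbance
    trace = []
    for _ in range(steps):
        trace.append(baseline + offset)
        offset *= decay
    return trace


def plastic_update(baseline, stumbles_per_cycle, threshold=0.5, step=0.1):
    """Learning-style change: if stumbling persists over many cycles,
    shift the baseline itself (direction and size are assumptions)."""
    stumble_rate = sum(stumbles_per_cycle) / len(stumbles_per_cycle)
    if stumble_rate > threshold:
        return baseline + step
    return baseline


# One bump: the pattern is disturbed, then relaxes back toward 1.0.
trace = elastic_response(baseline=1.0, disturbance=0.4, steps=3)

# Stumbling in three of four cycles: the baseline is permanently changed.
new_baseline = plastic_update(1.0, [1, 1, 0, 1])
```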

The same holds true for the Labrador-sized robot dog named Morti. What is more, the robot optimizes its movement patterns faster than an animal, in about one hour. Morti’s CPG is simulated on a small and lightweight computer that controls the motion of the robot’s legs. This virtual spinal cord is placed on the quadruped robot’s back where the head would be. During the hour it takes for the robot to learn to walk smoothly, sensor data from the robot’s feet are continuously compared with the expected touch-down predicted by the robot’s CPG. If the robot stumbles, the learning algorithm changes how far the legs swing back and forth, how fast the legs swing, and how long a leg is on the ground. The adjusted motion also affects how well the robot can utilize its compliant leg mechanics. During the learning process, the CPG sends adapted motor signals so that the robot stumbles less and optimizes its walking. In this framework, the virtual spinal cord has no explicit knowledge about the robot’s leg design, its motors and springs. Knowing nothing about the physics of the machine, it lacks a robot “model.”
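The model-free adaptation loop described above can be sketched as follows. Note the substitution: the study uses Bayesian optimization, whereas this sketch uses a much simpler random-perturbation search as a stand-in, and `toy_rollout` is a hypothetical replacement for a real walking trial. The parameter names and the "matched" values are invented for illustration.

```python
import random


def toy_rollout(params):
    """Hypothetical stand-in for one walking trial. Returns a mismatch
    cost that is smallest at an arbitrary 'matched' parameter set."""
    matched = {"amplitude": 0.4, "frequency": 2.0, "duty_factor": 0.6}
    return sum((params[k] - matched[k]) ** 2 for k in matched)


def adapt(params, rollout, trials=200, scale=0.1, seed=0):
    """Random-perturbation search (NOT Bayesian optimization): try a nearby
    parameter set and keep it only if the measured mismatch decreases."""
    rng = random.Random(seed)
    best = dict(params)
    best_cost = rollout(best)
    for _ in range(trials):
        candidate = {k: v + rng.gauss(0.0, scale) for k, v in best.items()}
        cost = rollout(candidate)
        if cost < best_cost:
            best, best_cost = candidate, cost
    return best, best_cost


initial = {"amplitude": 0.2, "frequency": 1.0, "duty_factor": 0.5}
initial_cost = toy_rollout(initial)
learned, learned_cost = adapt(initial, toy_rollout)
```

The loop never inspects the robot's mass, geometry, or motor properties; it only sees the cost returned by each trial, which mirrors the idea that the virtual spinal cord works without a model of the machine.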

“Our robot is practically ‘born’ knowing nothing about its leg anatomy or how they work,” Ruppert explains. “The CPG resembles a built-in automatic walking intelligence that nature provides and that we have transferred to the robot. The computer produces signals that control the legs’ motors, and the robot initially walks and stumbles. Data flows back from the sensors to the virtual spinal cord where sensor and CPG data are compared. If the sensor data does not match the expected data, the learning algorithm changes the walking behavior until the robot walks well, and without stumbling. Changing the CPG output while keeping reflexes active and monitoring the robot stumbling is a core part of the learning process.”

Energy efficient robot dog control

Morti’s computer draws only five watts of power while walking. Industrial quadruped robots from prominent manufacturers, which have learned to run with the help of complex controllers, are much more power hungry. Their controllers are coded with knowledge of the robot’s exact mass and body geometry, that is, they use a model of the robot. They typically draw several tens, up to several hundred watts of power. Both robot types run dynamically and efficiently, but the computational energy consumption is far lower in the Stuttgart model. The Stuttgart robot also provides important insights into animal anatomy.

“We can’t easily research the spinal cord of a living animal. But we can model one in the robot,” says Alexander Badri-Spröwitz, who co-authored the publication with Ruppert and heads the Dynamic Locomotion Research Group. “We know that these CPGs exist in many animals. We know that reflexes are embedded; but how can we combine both so that animals learn movements with reflexes and CPGs? This is fundamental research at the intersection between robotics and biology. The robotic model gives us answers to questions that biology alone can’t answer.”

Felix Ruppert, Learning plastic matching of robot dynamics in closed-loop central pattern generators, Nature Machine Intelligence (2022). DOI: 10.1038/s42256-022-00505-4. www.nature.com/articles/s42256-022-00505-4

Citation: Robot dog learns to walk in one hour (2022, July 18), retrieved 18 July 2022 from https://techxplore.com/news/2022-07-robot-dog-hour.html

