The AI edge chip market is on fire, kindled by ‘staggering’ VC funding | ZDNet

The market for chips that perform AI inference on edge devices such as smartphones is red-hot, even years after the field emerged, and it is attracting more and more startups and more and more venture funding, according to a prominent chip analyst firm covering the field.

“There are more new startups continuing to come out, and continuing to try to differentiate,” says Mike Demler, Senior Analyst with The Linley Group, which publishes the widely read Microprocessor Report, in an interview with ZDNet via phone.

The Linley Group hosts two conferences each year in Silicon Valley, the Spring and Fall Processor Forums, which feature numerous startups and in recent years have put an emphasis on AI startups.

At the most recent event, held in October both in person in Santa Clara, California, and virtually, the conference was packed with startups such as Flex Logix, Hailo Technologies, Roviero, BrainChip, Syntiant, Untether AI, Expedera, and Deep AI giving short talks about their chip designs.

Demler and team regularly assemble a research report titled the Guide to Processors for Deep Learning, the latest version of which is expected out this month. “I count more than 60 chip vendors in this latest edition,” he told ZDNet.

Edge AI has become a blanket term that refers to almost everything that is not in a data center, though it may include servers on the fringes of data centers. It ranges from smartphones to embedded devices that draw microwatts of power using TinyML techniques, such as Google's TensorFlow Lite for Microcontrollers framework.

The middle of that range, where chips consume from a few watts up to 75 watts, usually in the form of a pluggable PCIe or M.2 card, is an especially crowded part of the market, said Demler. (75 watts is the maximum power a standard PCIe slot can supply.)

“PCIe cards are the hot segment of the market, for AI for industrial, for robotics, for traffic monitoring,” he explained. “You’ve seen companies such as Blaize, Flex Logix — lots of these companies are going after that segment.”

But the very-low-power segment is also quite active. “I’d say the tinyML segment is just as hot. There we have chips running from a few milliwatts to even microwatts.”

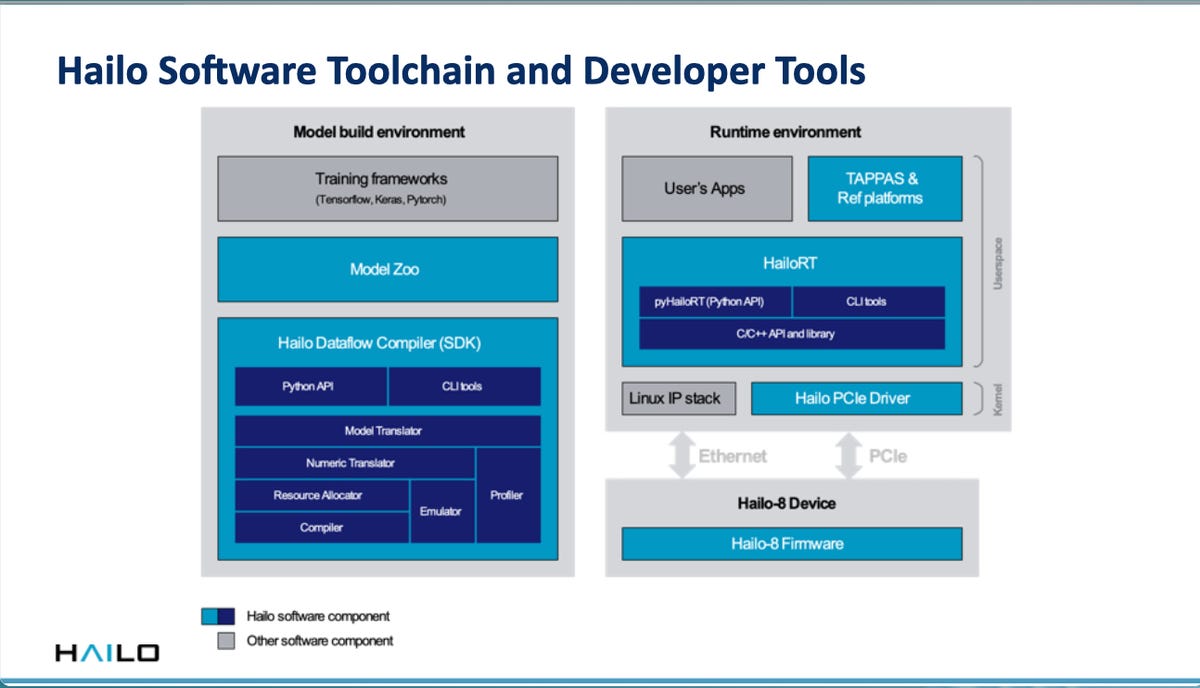

Hailo

Most of the devices are dedicated to the “inference” stage of AI, where a trained model makes predictions based on new data.

Inference happens after a neural network program has been trained, meaning that its tunable parameters, or weights, have been adjusted enough to reliably form predictions, so that the program can be put into service.

The initial challenge for the startups, said Demler, is to actually get from a nice PowerPoint slide show to working silicon. Many start out with a simulation of their chip running on a field-programmable gate array (FPGA), and then either move to selling a finished system-on-chip (SoC) or license their design as synthesizable IP that can be incorporated into a customer’s chip.

“We still see a lot of startups hedging their bets, or pursuing as many revenue models as they can,” said Demler, “by first demo’ing on an FPGA and offering their core IP for licensing.” Some startups also offer the FPGA-based version as a product.

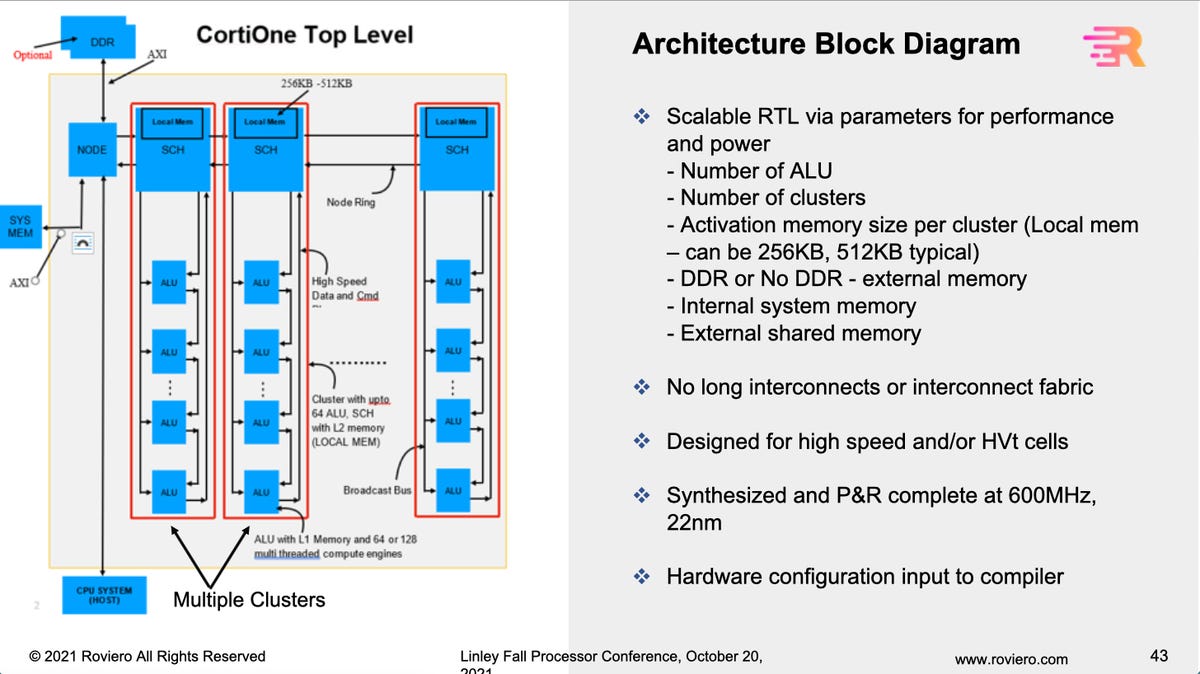

Roviero

With dozens of vendors in the market, even those that get to working silicon are challenged to show something that’s meaningfully different.

“It’s hard to come up with something that’s truly different,” said Demler. “I see these presentations, ‘world’s first,’ or, ‘world’s best,’ and I say, yeah, no, we’ve seen dozens.”

Some companies began with such a different approach that they set themselves apart early, but have taken some time to bear fruit.

BrainChip Holdings, of Sydney, Australia, with offices in Laguna Hills, California, got a very early start in 2011 with a chip to handle spiking neural networks, the neuromorphic approach to AI that purports to more closely model how the human brain functions.

Over the years, the company has shown off how its technology can perform tasks such as using machine vision to identify poker chips on the casino floor.

“BrainChip has been doggedly pursuing this spiking architecture,” said Demler. “It has a unique capability, it can truly learn on device,” thus performing both training and inference.

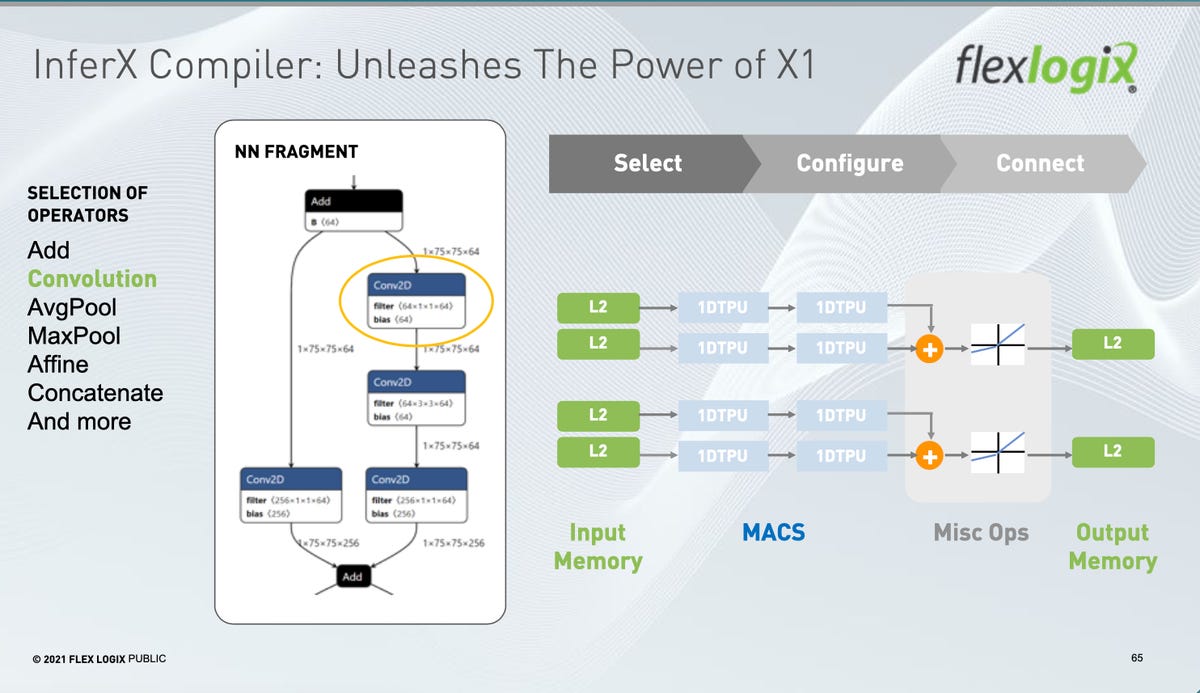

Flex Logix

BrainChip has in one sense come the farthest of any startup: it’s publicly traded. Its stock is listed on the Australian Securities Exchange under the ticker “BRN,” and last fall the company issued American Depositary Shares to trade on the U.S. over-the-counter market under the ticker “BCHPY.” Those shares have since more than tripled in value.

BrainChip is just starting to produce revenue. The company in October came out with mini PCIe boards of its “Akida” processor, for x86 and Raspberry Pi, and last month announced new PCIe boards for $499. The company in the December quarter had revenue of U.S.$1.1 million, up from $100,000 in the prior quarter. Total revenue for the year was $2.5 million, with an operating loss of $14 million.

Some other exotic approaches have proved hard to deliver in practice. Chip startup Mythic, founded in 2012 and based in Austin, Texas, has been pursuing the novel route of making some of its circuitry use analog chip technology, where instead of processing ones and zeros, it computes by manipulating the real-valued waveform of an electrical signal.

“Mythic has generated a few chips but no design wins,” Demler observed. “Everyone agrees, theoretically, analog should have a power efficiency advantage, but getting there in something commercially viable is going to be much more difficult.”

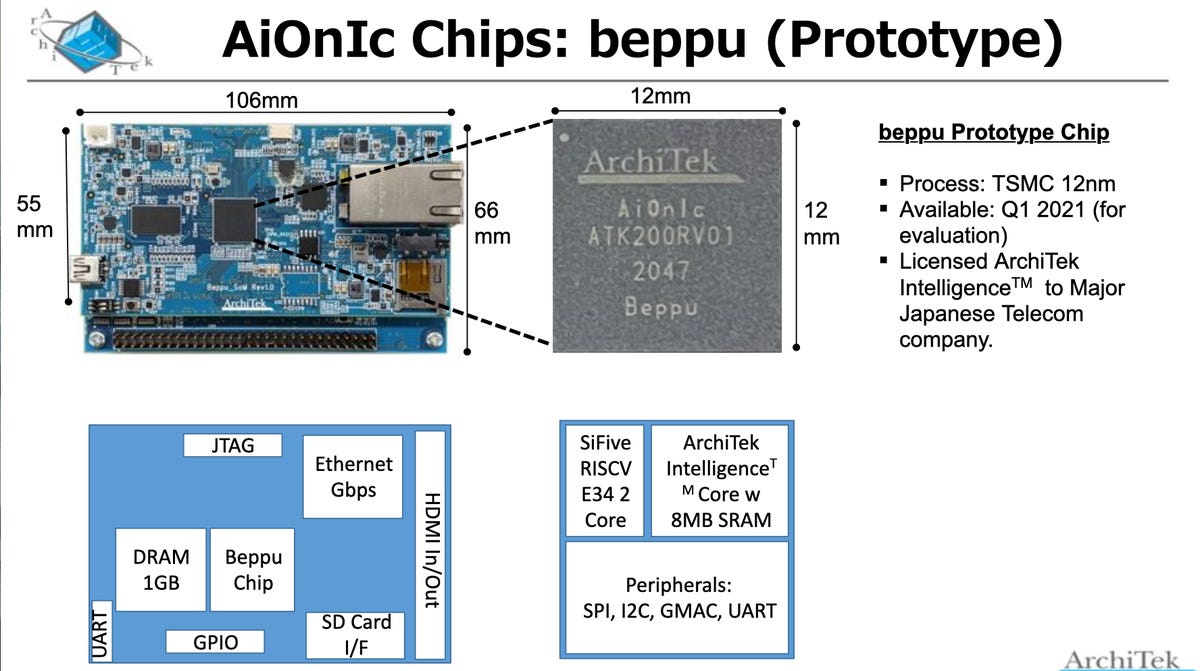

Architek

Another startup presenting at the Processor Forum, Syntiant, started out with an analog approach but decided that analog didn’t provide sufficient power advantages and took longer to bring to market, noted Demler.

Syntiant of Irvine, California, founded in 2017, has focused on very simple object recognition that can operate with low power on nothing more than a feature phone or a hearable.

“On a feature phone, you don’t want an apps processor, so the Syntiant solution is perfect,” observed Demler.

Regardless of the success of any one startup, the utility of special circuitry means that AI acceleration will endure as a category of chip technology, said Demler.

“AI is becoming so ubiquitous in so many fields, including automotive, embedded processing, the IoT, mobile, PCs, cloud, etc., that including a special-purpose accelerator will become commonplace, just like GPUs are for graphics.”

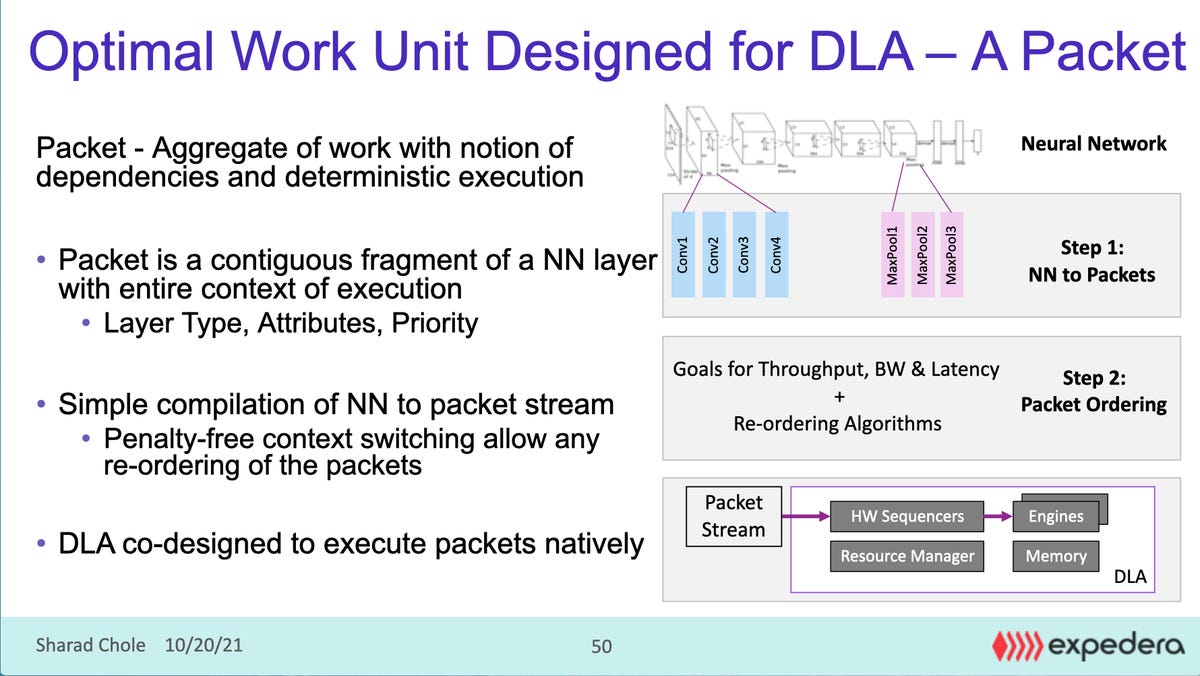

Expedera

Nevertheless, some tasks will be more efficient to run on a general-purpose CPU, DSP, or GPU, said Demler. That is why Intel, Nvidia, and others are augmenting their architectures with special instructions, such as those for vector processing.

Different approaches will continue to be explored as long as a venture capital market awash in cash lets a thousand flowers bloom.

“There’s still so much VC money out there, I’m astounded by the amount these companies continue to get,” said Demler.

Demler points to giant funding rounds such as that of SiMa.ai of San Jose, California, founded in 2018, which is developing what it calls an “MLSoC” focused on reducing power consumption. The company received $80 million in its Series B funding round.

Another is Hailo Technologies of Tel Aviv, founded in 2017, which has raised $320.5 million, according to FactSet, including $100 million in its most recent round, and is reportedly valued at $1 billion.

“The figures coming out of China, if true, are even more staggering,” said Demler. Funding looks set to continue for the time being, he said. “Until the VC community decides there’s something else to invest in, you’re going to see these companies popping up everywhere.”

At some point, a shake-out will happen, but when that day may come is not clear.

“Some of them have to go away eventually,” mused Demler. “Whether it’s 3 years or 5 years from now, we’ll see much fewer companies in this space.”

The next event Demler and colleagues will host is the Spring Processor Forum in late April at the Hyatt Regency Hotel in Santa Clara, with live-streaming for those who can’t attend in person.
