You’ve got to have heart: Computer scientist works to help AI comprehend human emotions

Bring up robot-human relations, and you’re bound to conjure images of famous futuristic robots, from the Terminator to C-3PO. But, in fact, the robot invasion has already begun. Devices and programs, including digital voice assistants, predictive text and household appliances, are smart and getting smarter. It doesn’t do, though, for computers to be all brain and no heart.

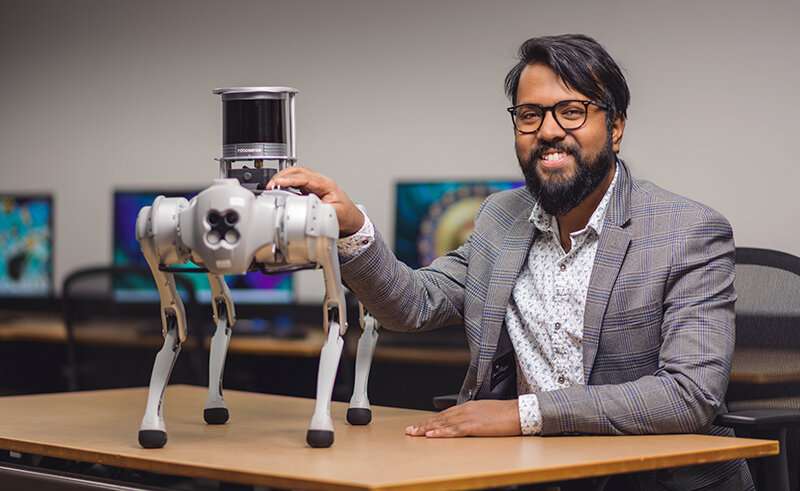

Computer scientist Aniket Bera, an associate professor of computer science in Purdue University’s College of Science, is working to make sure the future is a little more “Big Hero 6” and a little less Skynet. From therapy chatbots to intuitive assistant robots, to smart search and rescue drones, to computer modeling and graphics, his lab works to optimize computers for a human world.

“The goal of my research is to use AI to improve human life,” Bera said. “Humans, human behavior and human emotions are at the center of everything I do.”

Bera is an expert in the interdisciplinary field of affective computing: using machine learning and other computer science methods to build artificial intelligence programs that better understand and incorporate human behavior and emotion.

Artificial (emotional) intelligence

Computers are tools, and they’re only as good as we program them to be. When you ask Siri to play a song or Alexa to set a timer, they respond to the content of your words. But humans don’t communicate using only words: Tone of voice, context, posture and gestures all play a monumentally important role in human communication.

“When a friend asks you how you are, you can say, ‘I’m fine!’ in an upbeat tone, and it means something completely different than if you say, ‘I’m fine,’ like Eeyore,” Bera said. “Computers usually just pay attention to the content and ignore the context.”

That literality is fine for devices that are merely trying to help you with mundane tasks. However, if you are using AI for more complex purposes, the devices need a little more of Captain Kirk’s outlook and a little less of Spock’s. Bera is using his expertise in machine learning to program devices to incorporate an understanding of nonverbal cues and communication.

“We are trying to build AI models and systems that are more humanlike and more adept at interacting with humans,” Bera said. “If we can maximize AI’s ability to interpret and interact with humans, we can help more people more efficiently.”

Bera and his team are working on a multisensory approach to this “emotional” AI, which involves observing and analyzing facial expressions, body language, involuntary and subconscious movements and gestures, eye movements, speech patterns, intonations and different linguistic and cultural parameters. Training AI on these sorts of inputs not only improves communication, it also better equips the AI to respond to humans in a more appropriate and even emotive manner.

Bera explains that the United States and most of the world are undergoing a shortage of mental health professionals. Access to mental health care can be difficult to find, and sessions can be tough to afford or to fit into a person’s busy schedule. Bera sees emotionally intelligent AI programs as tools that might be able to bridge the gap, and he is working with medical schools and hospitals to bring these ideas to fruition.

AI-informed therapy programs could help assess a person’s mental and emotional health and point them toward the correct resources, as well as suggest some initial strategies to help. For some people, especially those who are neurodivergent or have social anxiety, talking to an AI may be lower-stakes and easier than talking to a human. At the same time, having an AI assistant take note of a person’s nonverbal communication and speech patterns can help human therapists track their patients’ progress between sessions and enable them to provide the best possible care.

Navigating emotional environments

Another area where enabling AI programs to better grasp human emotions matters is when robots share physical space with humans.

Self-driving cars can understand and interpret painted markers on the pavement, but they can’t identify human pedestrians and assess what they might do based on their movement.

A confused child, an angry adolescent or a panicking adult are all sights that might make a driver of a vehicle slow down and be more cautious than they might ordinarily be. The human driver knows intuitively that these are people who might make irrational or sudden moves that put them at risk of a collision with a vehicle.

Enabling robots to draw that same conclusion from nonverbal and postural cues could help self-driving vehicles and other autonomous robots navigate physical environments more safely. Bera is working with collaborators on programming the brains of a wheeled robot called ProxEmo that can read humans’ body language to gauge their emotions.

In the future, similar protocols could help other types of robots assess which individuals in a crowd are confused or lost and help them quickly and efficiently.

Rescue rovers

Some physical environments—the scenes of natural disasters, battlefields and perilous environments—are too dangerous for humans. Historically, in those cases, humans have harnessed non-human partners, including coal mine canaries, rescue dogs and even bomb-sniffing rats, to go where no human can.

Of course, it would be even better if we could avoid risking any lives at all, not just the human ones. Bera and his team are working with commercially available drone models, like a four-footed robot “dog,” to create autonomous rovers that can, for example, search for survivors after an earthquake.

“Most people who die in the earthquake don’t die in the actual quake,” Bera said. “They die from being trapped in the rubble; they die because first responders couldn’t find them fast enough. A drone can scan the environment and crawl through debris to detect signs of life, including heartbeats, body heat and carbon dioxide, much more safely than even dogs can. Plus, there’s no risk to a living dog if we send in the robot dog.”

Understanding how humans move can help the robots navigate disaster scenes, stay out of the way of human first responders and help locate, reach and rescue survivors more efficiently than either humans or dogs could on their own. Think of Wall-E and EVE working together to restore the trashed planet Earth.

“The idea is to build a future where robots can be partners, can help humans accomplish goals and tasks more safely, more efficiently and more effectively,” Bera said. “In computer science, a lot of time the biggest problems are the humans. What our research does is put the human back into problem solving to build a better world.”

Citation: You’ve got to have heart: Computer scientist works to help AI comprehend human emotions (2023, February 13), retrieved 13 February 2023 from https://techxplore.com/news/2023-02-youve-heart-scientist-ai-comprehend.html

